AutoMM 文本 - 快速入门¶

MultiModalPredictor 可以解决数据为图像、文本、数值或分类特征的问题。

为了开始,我们首先演示如何使用它来解决仅包含文本的问题。我们选择了两个经典的NLP问题进行演示:

在这里,我们将NLP数据集格式化为数据表,其中特征列包含文本字段,标签列包含数值(回归)/分类(分类)值。表中的每一行对应一个训练样本。

%matplotlib inline

import numpy as np

import warnings

import matplotlib.pyplot as plt

warnings.filterwarnings('ignore')

np.random.seed(123)

情感分析任务¶

首先,我们考虑斯坦福情感树库(SST)数据集,该数据集由电影评论及其相关情感组成。 给定一个新的电影评论,目标是预测文本中反映的情感(在这种情况下是一个二分类,如果评论传达积极意见则标记为1,否则标记为0)。让我们首先加载并查看数据, 注意标签存储在名为label的列中。

from autogluon.core.utils.loaders import load_pd

train_data = load_pd.load('https://autogluon-text.s3-accelerate.amazonaws.com/glue/sst/train.parquet')

test_data = load_pd.load('https://autogluon-text.s3-accelerate.amazonaws.com/glue/sst/dev.parquet')

subsample_size = 1000 # subsample data for faster demo, try setting this to larger values

train_data = train_data.sample(n=subsample_size, random_state=0)

train_data.head(10)

| sentence | label | |

|---|---|---|

| 43787 | very pleasing at its best moments | 1 |

| 16159 | , american chai is enough to make you put away... | 0 |

| 59015 | too much like an infomercial for ram dass 's l... | 0 |

| 5108 | a stirring visual sequence | 1 |

| 67052 | cool visual backmasking | 1 |

| 35938 | hard ground | 0 |

| 49879 | the striking , quietly vulnerable personality ... | 1 |

| 51591 | pan nalin 's exposition is beautiful and myste... | 1 |

| 56780 | wonderfully loopy | 1 |

| 28518 | most beautiful , evocative | 1 |

上述数据恰好以Parquet格式存储,但你也可以直接从CSV文件或其他等效格式load()数据。

虽然这里我们从AWS S3云存储加载文件,但这些文件也可以是您机器上的本地文件。

加载后,train_data只是一个Pandas DataFrame,

其中每一行代表一个不同的训练示例。

Training¶

为了确保本教程快速运行,我们仅使用1000个训练示例的子集调用fit(),并将其运行时间限制在大约1分钟。

为了在您的应用程序中获得合理的性能,建议您设置更长的time_limit(例如1小时),或者根本不指定time_limit(time_limit=None)。

from autogluon.multimodal import MultiModalPredictor

import uuid

model_path = f"./tmp/{uuid.uuid4().hex}-automm_sst"

predictor = MultiModalPredictor(label='label', eval_metric='acc', path=model_path)

predictor.fit(train_data, time_limit=180)

/home/ci/opt/venv/lib/python3.11/site-packages/mmengine/optim/optimizer/zero_optimizer.py:11: DeprecationWarning: `TorchScript` support for functional optimizers is deprecated and will be removed in a future PyTorch release. Consider using the `torch.compile` optimizer instead.

from torch.distributed.optim import \

=================== System Info ===================

AutoGluon Version: 1.2b20241127

Python Version: 3.11.9

Operating System: Linux

Platform Machine: x86_64

Platform Version: #1 SMP Tue Sep 24 10:00:37 UTC 2024

CPU Count: 8

Pytorch Version: 2.5.1+cu124

CUDA Version: 12.4

Memory Avail: 28.40 GB / 30.95 GB (91.8%)

Disk Space Avail: 187.78 GB / 255.99 GB (73.4%)

===================================================

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

2 unique label values: [1, 0]

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during Predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression', 'quantile'])

AutoMM starts to create your model. ✨✨✨

To track the learning progress, you can open a terminal and launch Tensorboard:

```shell

# Assume you have installed tensorboard

tensorboard --logdir /home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst

```

Seed set to 0

GPU Count: 1

GPU Count to be Used: 1

GPU 0 Name: Tesla T4

GPU 0 Memory: 0.43GB/15.0GB (Used/Total)

Using 16bit Automatic Mixed Precision (AMP)

GPU available: True (cuda), used: True

TPU available: False, using: 0 TPU cores

HPU available: False, using: 0 HPUs

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0]

| Name | Type | Params | Mode

---------------------------------------------------------------------------

0 | model | HFAutoModelForTextPrediction | 108 M | train

1 | validation_metric | MulticlassAccuracy | 0 | train

2 | loss_func | CrossEntropyLoss | 0 | train

---------------------------------------------------------------------------

108 M Trainable params

0 Non-trainable params

108 M Total params

435.573 Total estimated model params size (MB)

4 Modules in train mode

225 Modules in eval mode

Epoch 0, global step 3: 'val_acc' reached 0.56000 (best 0.56000), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=0-step=3.ckpt' as top 3

Epoch 0, global step 7: 'val_acc' reached 0.62500 (best 0.62500), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=0-step=7.ckpt' as top 3

Epoch 1, global step 10: 'val_acc' reached 0.70500 (best 0.70500), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=1-step=10.ckpt' as top 3

Epoch 1, global step 14: 'val_acc' reached 0.84500 (best 0.84500), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=1-step=14.ckpt' as top 3

Epoch 2, global step 17: 'val_acc' reached 0.89000 (best 0.89000), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=2-step=17.ckpt' as top 3

Epoch 2, global step 21: 'val_acc' reached 0.82500 (best 0.89000), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=2-step=21.ckpt' as top 3

Epoch 3, global step 24: 'val_acc' reached 0.89000 (best 0.89000), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=3-step=24.ckpt' as top 3

Epoch 3, global step 28: 'val_acc' reached 0.88000 (best 0.89000), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=3-step=28.ckpt' as top 3

Epoch 4, global step 31: 'val_acc' was not in top 3

Epoch 4, global step 35: 'val_acc' was not in top 3

Epoch 5, global step 38: 'val_acc' was not in top 3

Epoch 5, global step 42: 'val_acc' was not in top 3

Epoch 6, global step 45: 'val_acc' reached 0.89500 (best 0.89500), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=6-step=45.ckpt' as top 3

Epoch 6, global step 49: 'val_acc' reached 0.89500 (best 0.89500), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=6-step=49.ckpt' as top 3

Epoch 7, global step 52: 'val_acc' was not in top 3

Epoch 7, global step 56: 'val_acc' reached 0.89500 (best 0.89500), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/epoch=7-step=56.ckpt' as top 3

Epoch 8, global step 59: 'val_acc' was not in top 3

Epoch 8, global step 63: 'val_acc' was not in top 3

Epoch 9, global step 66: 'val_acc' was not in top 3

Time limit reached. Elapsed time is 0:03:00. Signaling Trainer to stop.

Epoch 9, global step 68: 'val_acc' was not in top 3

Start to fuse 3 checkpoints via the greedy soup algorithm.

AutoMM has created your model. 🎉🎉🎉

To load the model, use the code below:

```python

from autogluon.multimodal import MultiModalPredictor

predictor = MultiModalPredictor.load("/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst")

```

If you are not satisfied with the model, try to increase the training time,

adjust the hyperparameters (https://auto.gluon.ai/stable/tutorials/multimodal/advanced_topics/customization.html),

or post issues on GitHub (https://github.com/autogluon/autogluon/issues).

<autogluon.multimodal.predictor.MultiModalPredictor at 0x7fd8a680ce10>

上面我们指定了:名为label的列包含要预测的标签值,AutoGluon应优化其预测以用于准确性评估指标,训练好的模型应保存在automm_sst文件夹中,训练应运行大约60秒。

Evaluation¶

训练后,我们可以轻松地在与训练数据格式相似的单独测试数据上评估我们的预测器。

test_score = predictor.evaluate(test_data)

print(test_score)

{'acc': 0.8956422018348624}

默认情况下,evaluate() 将报告之前指定的评估指标,在我们的示例中为 accuracy。您也可以在调用 evaluate 时指定其他指标,例如 F1 分数。

test_score = predictor.evaluate(test_data, metrics=['acc', 'f1'])

print(test_score)

{'acc': 0.8956422018348624, 'f1': 0.8992248062015504}

Prediction¶

你可以通过调用predictor.predict()轻松地从这些模型中获得预测。

sentence1 = "it's a charming and often affecting journey."

sentence2 = "It's slow, very, very, very slow."

predictions = predictor.predict({'sentence': [sentence1, sentence2]})

print('"Sentence":', sentence1, '"Predicted Sentiment":', predictions[0])

print('"Sentence":', sentence2, '"Predicted Sentiment":', predictions[1])

"Sentence": it's a charming and often affecting journey. "Predicted Sentiment": 1

"Sentence": It's slow, very, very, very slow. "Predicted Sentiment": 0

对于分类任务,您可以要求预测的类别概率而不是预测的类别。

probs = predictor.predict_proba({'sentence': [sentence1, sentence2]})

print('"Sentence":', sentence1, '"Predicted Class-Probabilities":', probs[0])

print('"Sentence":', sentence2, '"Predicted Class-Probabilities":', probs[1])

"Sentence": it's a charming and often affecting journey. "Predicted Class-Probabilities": [2.9311537e-05 9.9997067e-01]

"Sentence": It's slow, very, very, very slow. "Predicted Class-Probabilities": [9.9981374e-01 1.8631402e-04]

我们可以同样轻松地生成整个数据集的预测。

test_predictions = predictor.predict(test_data)

test_predictions.head()

0 1

1 0

2 1

3 1

4 0

Name: label, dtype: int64

Save and Load¶

The trained predictor is automatically saved at the end of fit(), and you can easily reload it.

警告

MultiModalPredictor.load() uses pickle module implicitly, which is known to be insecure. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling. Never load data that could have come from an untrusted source, or that could have been tampered with. Only load data you trust.

loaded_predictor = MultiModalPredictor.load(model_path)

loaded_predictor.predict_proba({'sentence': [sentence1, sentence2]})

Load pretrained checkpoint: /home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/b4fb4356abb94726b15e99639194fe3b-automm_sst/model.ckpt

array([[2.9311537e-05, 9.9997067e-01],

[9.9981409e-01, 1.8595056e-04]], dtype=float32)

你也可以通过调用.save()将预测器保存到任何位置。

new_model_path = f"./tmp/{uuid.uuid4().hex}-automm_sst"

loaded_predictor.save(new_model_path)

loaded_predictor2 = MultiModalPredictor.load(new_model_path)

loaded_predictor2.predict_proba({'sentence': [sentence1, sentence2]})

Load pretrained checkpoint: /home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/569f0508a0154857a57ede9fe1912b14-automm_sst/model.ckpt

array([[2.9311537e-05, 9.9997067e-01],

[9.9981409e-01, 1.8595056e-04]], dtype=float32)

Extract Embeddings¶

您还可以使用训练好的预测器来提取嵌入,将数据表的每一行映射到从该行的中间神经网络表示中提取的嵌入向量。

embeddings = predictor.extract_embedding(test_data)

print(embeddings.shape)

(872, 768)

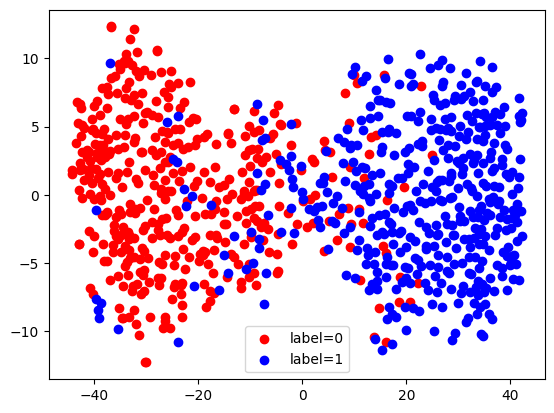

在这里,我们使用TSNE来可视化这些提取的嵌入。我们可以看到有两个簇对应于我们的两个标签,因为这个网络已经被训练来区分这些标签。

from sklearn.manifold import TSNE

X_embedded = TSNE(n_components=2, random_state=123).fit_transform(embeddings)

for val, color in [(0, 'red'), (1, 'blue')]:

idx = (test_data['label'].to_numpy() == val).nonzero()

plt.scatter(X_embedded[idx, 0], X_embedded[idx, 1], c=color, label=f'label={val}')

plt.legend(loc='best')

<matplotlib.legend.Legend at 0x7fd8e0897650>

句子相似度任务¶

接下来,我们使用MultiModalPredictor来训练一个模型,用于评估两个句子在语义上的相似程度。 我们使用语义文本相似性基准数据集进行说明。

sts_train_data = load_pd.load('https://autogluon-text.s3-accelerate.amazonaws.com/glue/sts/train.parquet')[['sentence1', 'sentence2', 'score']]

sts_test_data = load_pd.load('https://autogluon-text.s3-accelerate.amazonaws.com/glue/sts/dev.parquet')[['sentence1', 'sentence2', 'score']]

sts_train_data.head(10)

Loaded data from: https://autogluon-text.s3-accelerate.amazonaws.com/glue/sts/train.parquet | Columns = 4 / 4 | Rows = 5749 -> 5749

Loaded data from: https://autogluon-text.s3-accelerate.amazonaws.com/glue/sts/dev.parquet | Columns = 4 / 4 | Rows = 1500 -> 1500

| sentence1 | sentence2 | score | |

|---|---|---|---|

| 0 | A plane is taking off. | An air plane is taking off. | 5.00 |

| 1 | A man is playing a large flute. | A man is playing a flute. | 3.80 |

| 2 | A man is spreading shreded cheese on a pizza. | A man is spreading shredded cheese on an uncoo... | 3.80 |

| 3 | Three men are playing chess. | Two men are playing chess. | 2.60 |

| 4 | A man is playing the cello. | A man seated is playing the cello. | 4.25 |

| 5 | Some men are fighting. | Two men are fighting. | 4.25 |

| 6 | A man is smoking. | A man is skating. | 0.50 |

| 7 | The man is playing the piano. | The man is playing the guitar. | 1.60 |

| 8 | A man is playing on a guitar and singing. | A woman is playing an acoustic guitar and sing... | 2.20 |

| 9 | A person is throwing a cat on to the ceiling. | A person throws a cat on the ceiling. | 5.00 |

在这些数据中,名为score的列包含数值(我们想要预测的),这些数值是人工标注的每对句子的相似度分数。

print('Min score=', min(sts_train_data['score']), ', Max score=', max(sts_train_data['score']))

Min score= 0.0 , Max score= 5.0

让我们训练一个回归模型来预测这些分数。请注意,我们只需要指定标签列,AutoGluon 会自动确定预测问题的类型和适当的损失函数。再次提醒,你应该增加下面较短的 time_limit 以在你自己的应用中获得合理的性能。

sts_model_path = f"./tmp/{uuid.uuid4().hex}-automm_sts"

predictor_sts = MultiModalPredictor(label='score', path=sts_model_path)

predictor_sts.fit(sts_train_data, time_limit=60)

=================== System Info ===================

AutoGluon Version: 1.2b20241127

Python Version: 3.11.9

Operating System: Linux

Platform Machine: x86_64

Platform Version: #1 SMP Tue Sep 24 10:00:37 UTC 2024

CPU Count: 8

Pytorch Version: 2.5.1+cu124

CUDA Version: 12.4

Memory Avail: 25.02 GB / 30.95 GB (80.9%)

Disk Space Avail: 186.52 GB / 255.99 GB (72.9%)

===================================================

AutoGluon infers your prediction problem is: 'regression' (because dtype of label-column == float and label-values can't be converted to int).

Label info (max, min, mean, stddev): (5.0, 0.0, 2.701, 1.4644)

If 'regression' is not the correct problem_type, please manually specify the problem_type parameter during Predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression', 'quantile'])

AutoMM starts to create your model. ✨✨✨

To track the learning progress, you can open a terminal and launch Tensorboard:

```shell

# Assume you have installed tensorboard

tensorboard --logdir /home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/851cfffa39e74858a2ec07a1e608564d-automm_sts

```

Seed set to 0

GPU Count: 1

GPU Count to be Used: 1

GPU 0 Name: Tesla T4

GPU 0 Memory: 0.58GB/15.0GB (Used/Total)

Using 16bit Automatic Mixed Precision (AMP)

GPU available: True (cuda), used: True

TPU available: False, using: 0 TPU cores

HPU available: False, using: 0 HPUs

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0]

| Name | Type | Params | Mode

---------------------------------------------------------------------------

0 | model | HFAutoModelForTextPrediction | 108 M | train

1 | validation_metric | MeanSquaredError | 0 | train

2 | loss_func | MSELoss | 0 | train

---------------------------------------------------------------------------

108 M Trainable params

0 Non-trainable params

108 M Total params

435.570 Total estimated model params size (MB)

4 Modules in train mode

225 Modules in eval mode

Epoch 0, global step 20: 'val_rmse' reached 0.59856 (best 0.59856), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/851cfffa39e74858a2ec07a1e608564d-automm_sts/epoch=0-step=20.ckpt' as top 3

Epoch 0, global step 40: 'val_rmse' reached 0.48791 (best 0.48791), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/851cfffa39e74858a2ec07a1e608564d-automm_sts/epoch=0-step=40.ckpt' as top 3

Epoch 1, global step 61: 'val_rmse' reached 0.49064 (best 0.48791), saving model to '/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/851cfffa39e74858a2ec07a1e608564d-automm_sts/epoch=1-step=61.ckpt' as top 3

Time limit reached. Elapsed time is 0:01:06. Signaling Trainer to stop.

Start to fuse 3 checkpoints via the greedy soup algorithm.

AutoMM has created your model. 🎉🎉🎉

To load the model, use the code below:

```python

from autogluon.multimodal import MultiModalPredictor

predictor = MultiModalPredictor.load("/home/ci/autogluon/docs/tutorials/multimodal/text_prediction/tmp/851cfffa39e74858a2ec07a1e608564d-automm_sts")

```

If you are not satisfied with the model, try to increase the training time,

adjust the hyperparameters (https://auto.gluon.ai/stable/tutorials/multimodal/advanced_topics/customization.html),

or post issues on GitHub (https://github.com/autogluon/autogluon/issues).

<autogluon.multimodal.predictor.MultiModalPredictor at 0x7fd8e08f4fd0>

我们再次在独立的测试数据上评估我们训练好的模型的性能。下面我们选择计算以下指标:RMSE、皮尔逊相关系数和斯皮尔曼相关系数。

test_score = predictor_sts.evaluate(sts_test_data, metrics=['rmse', 'pearsonr', 'spearmanr'])

print('RMSE = {:.2f}'.format(test_score['rmse']))

print('PEARSONR = {:.4f}'.format(test_score['pearsonr']))

print('SPEARMANR = {:.4f}'.format(test_score['spearmanr']))

RMSE = 0.70

PEARSONR = 0.8869

SPEARMANR = 0.8871

让我们使用我们的模型来预测几个句子之间的相似度分数。

sentences = ['The child is riding a horse.',

'The young boy is riding a horse.',

'The young man is riding a horse.',

'The young man is riding a bicycle.']

score1 = predictor_sts.predict({'sentence1': [sentences[0]],

'sentence2': [sentences[1]]}, as_pandas=False)

score2 = predictor_sts.predict({'sentence1': [sentences[0]],

'sentence2': [sentences[2]]}, as_pandas=False)

score3 = predictor_sts.predict({'sentence1': [sentences[0]],

'sentence2': [sentences[3]]}, as_pandas=False)

print(score1, score2, score3)

3.9919393 2.9244866 0.99693316

尽管MultiModalPredictor目前支持分类和回归任务,但如果你将它们正确格式化为数据表,它可以直接用于许多NLP任务。请注意,此数据表中可以有许多文本列。请参阅MultiModalPredictor文档以查看所有可用的方法/选项。

与TabularPredictor训练/集成不同类型的模型不同,MultiModalPredictor专注于选择和微调基于深度学习的模型。在内部,它与timm、huggingface/transformers、openai/clip集成作为模型库。

Other Examples¶

You may go to AutoMM Examples to explore other examples about AutoMM.

Customization¶

To learn how to customize AutoMM, please refer to Customize AutoMM.