Probabilistic RNN

This notebook shows an example of how a probabilistic RNN can be used with darts. This type of RNN was inspired by, and is almost identical to, DeepAR: https://arxiv.org/abs/1704.04110

[1]:

# fix python path if working locally

from utils import fix_pythonpath_if_working_locally

fix_pythonpath_if_working_locally()

[2]:

%load_ext autoreload

%autoreload 2

%matplotlib inline

[3]:

import torch

import torch.nn as nn

import torch.optim as optim

import numpy as np

import pandas as pd

import shutil

from sklearn.preprocessing import MinMaxScaler

from tqdm import tqdm_notebook as tqdm

from tensorboardX import SummaryWriter

import matplotlib.pyplot as plt

from darts import TimeSeries

from darts.utils.callbacks import TFMProgressBar

from darts.dataprocessing.transformers import Scaler

from darts.models import RNNModel, ExponentialSmoothing, BlockRNNModel

from darts.metrics import mape

from darts.utils.statistics import check_seasonality, plot_acf

import darts.utils.timeseries_generation as tg

from darts.datasets import AirPassengersDataset, EnergyDataset

from darts.utils.timeseries_generation import datetime_attribute_timeseries

from darts.utils.missing_values import fill_missing_values

from darts.utils.likelihood_models import GaussianLikelihood

from darts.timeseries import concatenate

import warnings

warnings.filterwarnings("ignore")

import logging

logging.disable(logging.CRITICAL)

def generate_torch_kwargs():
    # run torch models on CPU, and disable progress bars for all model stages except training.
    return {
        "pl_trainer_kwargs": {
            "accelerator": "cpu",
            "callbacks": [TFMProgressBar(enable_train_bar_only=True)],
        }
    }

Variable noise series

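As a toy example, we create a target time series by adding a sine wave and a Gaussian noise series. To make things more interesting, the intensity of the Gaussian noise is itself modulated by a sine wave (with a different frequency). This means that the effect of the noise grows stronger and weaker in an oscillating fashion. The idea is to test whether a probabilistic RNN can model this oscillating uncertainty in its predictions.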
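To make the construction concrete, here is a minimal standard-library sketch of the same idea. It assumes the sine components take the form sin(2πft), with the frequencies and noise standard deviation used in the next cell; the helper names (`sine`, `noise_scale`, `sample_target`) are illustrative and are not part of darts.

```python
import math
import random
import statistics


def sine(t, freq):
    """Unit-amplitude sine wave evaluated at integer time step t."""
    return math.sin(2 * math.pi * freq * t)


def noise_scale(t, std=0.6, freq=0.02):
    """Noise standard deviation at step t: base std times a modulator in [0, 1]."""
    modulator = (sine(t, freq) + 1) / 2
    return std * modulator


def sample_target(t, rng):
    """One draw of the target at step t: seasonal sine plus modulated Gaussian noise."""
    return sine(t, 0.05) + rng.gauss(0.0, noise_scale(t))


rng = random.Random(0)
# Spread of the target where the modulator is near its peak (t=12) and its trough (t=37).
spread_peak = statistics.pstdev([sample_target(12, rng) for _ in range(2000)])
spread_trough = statistics.pstdev([sample_target(37, rng) for _ in range(2000)])
print(f"std near peak: {spread_peak:.3f}, std near trough: {spread_trough:.3f}")
```

The spread of the target therefore depends on where in the modulation cycle a time step falls, which is the pattern the probabilistic model is expected to pick up.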

[4]:

length = 400

trend = tg.linear_timeseries(length=length, end_value=4)

season1 = tg.sine_timeseries(length=length, value_frequency=0.05, value_amplitude=1.0)

noise = tg.gaussian_timeseries(length=length, std=0.6)

noise_modulator = (

tg.sine_timeseries(length=length, value_frequency=0.02)

+ tg.constant_timeseries(length=length, value=1)

) / 2

noise = noise * noise_modulator

target_series = sum([noise, season1])

covariates = noise_modulator

target_train, target_val = target_series.split_after(0.65)

[5]:

target_train.plot()

target_val.plot()

[5]:

<Axes: xlabel='time'>

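In the following, we train a probabilistic RNN to predict the target series autoregressively, while also taking the modulation of the noise component into account as a covariate that is known in the future. The RNN therefore knows when the noise component of the target is strong, but it does not know the noise component itself. Let's see whether the RNN can make use of this information.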
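The model in the next cell is given `GaussianLikelihood()`, so at each step it describes the target with a mean and a standard deviation rather than a single value. The sketch below shows the Gaussian negative log-likelihood that a model of this kind is typically trained to minimize. It is a generic illustration (the function name `gaussian_nll` is hypothetical), not darts' internal implementation.

```python
import math


def gaussian_nll(y, mu, sigma):
    """Negative log-likelihood of observation y under N(mu, sigma^2)."""
    return 0.5 * math.log(2 * math.pi * sigma**2) + (y - mu) ** 2 / (2 * sigma**2)


# An observation far from the mean is penalized less when sigma is large ...
far_wide = gaussian_nll(y=2.0, mu=0.0, sigma=2.0)
far_narrow = gaussian_nll(y=2.0, mu=0.0, sigma=0.5)
# ... while an observation close to the mean favors a small sigma.
near_wide = gaussian_nll(y=0.1, mu=0.0, sigma=2.0)
near_narrow = gaussian_nll(y=0.1, mu=0.0, sigma=0.5)
print(far_wide < far_narrow, near_narrow < near_wide)
```

Under this loss, a covariate that signals the noise level can help: the model is rewarded for predicting a wide distribution where the noise is strong and a narrow one where it is weak.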

[6]:

my_model = RNNModel(

model="LSTM",

hidden_dim=20,

dropout=0,

batch_size=16,

n_epochs=50,

optimizer_kwargs={"lr": 1e-3},

random_state=0,

training_length=50,

input_chunk_length=20,

likelihood=GaussianLikelihood(),

**generate_torch_kwargs(),

)

my_model.fit(target_train, future_covariates=covariates)

[6]:

RNNModel(model=LSTM, hidden_dim=20, n_rnn_layers=1, dropout=0, training_length=50, batch_size=16, n_epochs=50, optimizer_kwargs={'lr': 0.001}, random_state=0, input_chunk_length=20, likelihood=GaussianLikelihood(prior_mu=None, prior_sigma=None, prior_strength=1.0, beta_nll=0.0), pl_trainer_kwargs={'accelerator': 'cpu', 'callbacks': [<darts.utils.callbacks.TFMProgressBar object at 0x2b3386350>]})

[7]:

pred = my_model.predict(80, num_samples=50)

target_val.slice_intersect(pred).plot(label="target")

pred.plot(label="prediction")

[7]:

<Axes: xlabel='time'>

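We can see that, in addition to correctly predicting the simple oscillatory behavior of the target, the RNN also correctly expresses more uncertainty in its predictions when the noise component is higher.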
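The uncertainty shown in such a plot comes from the sampled forecasts (`num_samples=50` above): at each time step, the spread between a low and a high quantile of the samples gives the width of the band. Here is a small sketch of that computation, assuming linear interpolation between order statistics; the helper names and the sample values are made up for illustration and are not real model output.

```python
def quantile(values, q):
    """Empirical quantile with linear interpolation between order statistics."""
    ordered = sorted(values)
    pos = q * (len(ordered) - 1)
    lower = int(pos)
    upper = min(lower + 1, len(ordered) - 1)
    frac = pos - lower
    return ordered[lower] * (1 - frac) + ordered[upper] * frac


def interval_width(samples, low=0.05, high=0.95):
    """Width of the band between the low and high quantiles of the samples."""
    return quantile(samples, high) - quantile(samples, low)


# Hypothetical sampled forecasts for two time steps (not real model output).
calm_step = [0.98, 1.00, 1.01, 1.02, 0.99]
noisy_step = [0.40, 1.60, 0.90, 1.30, 0.70]
print(interval_width(calm_step) < interval_width(noisy_step))
```

A step where the samples disagree more produces a wider band, which is how higher uncertainty appears in the plot.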

Daily energy generation

[8]:

df3 = EnergyDataset().load().pd_dataframe()

df3_day_avg = (

df3.groupby(df3.index.astype(str).str.split(" ").str[0]).mean().reset_index()

)

series_en = fill_missing_values(

TimeSeries.from_dataframe(

df3_day_avg, "time", ["generation hydro run-of-river and poundage"]

),

"auto",

)

# convert to float32

series_en = series_en.astype(np.float32)

# create train and validation splits

train_en, val_en = series_en.split_after(pd.Timestamp("20170901"))

# scale

scaler_en = Scaler()

train_en_transformed = scaler_en.fit_transform(train_en)

val_en_transformed = scaler_en.transform(val_en)

series_en_transformed = scaler_en.transform(series_en)

# add the day as a covariate (no scaling required as the day is one-hot-encoded)

day_series = datetime_attribute_timeseries(

series_en, attribute="day", one_hot=True, dtype=np.float32

)

plt.figure(figsize=(10, 3))

train_en_transformed.plot(label="train")

val_en_transformed.plot(label="validation")

[8]:

<Axes: xlabel='time'>

[9]:

model_name = "LSTM_test"

model_en = RNNModel(

model="LSTM",

hidden_dim=20,

n_rnn_layers=2,

dropout=0.2,

batch_size=16,

n_epochs=10,

optimizer_kwargs={"lr": 1e-3},

random_state=0,

training_length=300,

input_chunk_length=300,

likelihood=GaussianLikelihood(),

model_name=model_name,

save_checkpoints=True, # store the latest and best performing epochs

force_reset=True,

**generate_torch_kwargs(),

)

[10]:

model_en.fit(

series=train_en_transformed,

future_covariates=day_series,

val_series=val_en_transformed,

val_future_covariates=day_series,

)

[10]:

RNNModel(model=LSTM, hidden_dim=20, n_rnn_layers=2, dropout=0.2, training_length=300, batch_size=16, n_epochs=10, optimizer_kwargs={'lr': 0.001}, random_state=0, input_chunk_length=300, likelihood=GaussianLikelihood(prior_mu=None, prior_sigma=None, prior_strength=1.0, beta_nll=0.0), model_name=LSTM_test, save_checkpoints=True, force_reset=True, pl_trainer_kwargs={'accelerator': 'cpu', 'callbacks': [<darts.utils.callbacks.TFMProgressBar object at 0x2c1c97a00>]})

Let's load the model at its best-performing state

[11]:

model_en = RNNModel.load_from_checkpoint(model_name=model_name, best=True)

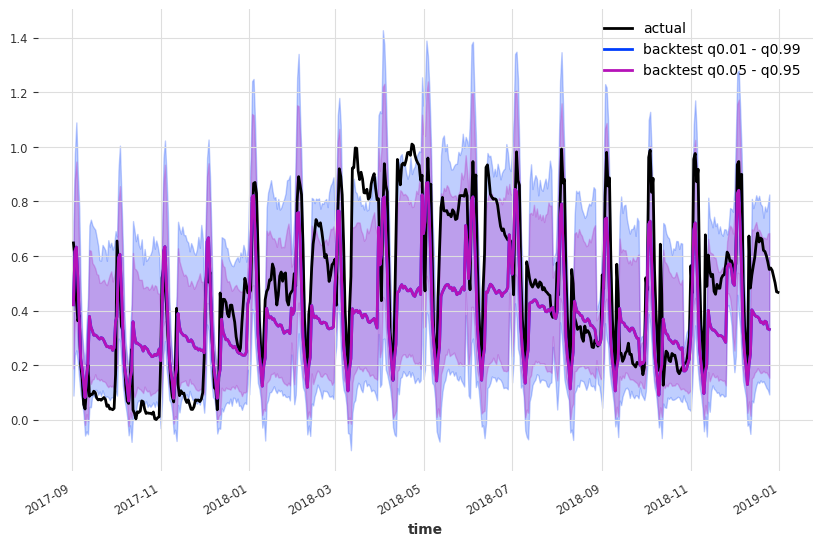

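We now perform historical forecasts:

- we start predicting at the beginning of the validation series (start=val_en_transformed.start_time())
- each prediction has length forecast_horizon=30
- the next prediction starts stride=30 points later
- we keep all predicted values from each forecast (last_points_only=False)
- we continue until we run out of input data

Finally, we concatenate the historical forecasts into a single series that is continuous along the time axis.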
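The windowing logic described above can be sketched with plain integer indices. This is a simplified illustration, not darts' implementation: `forecast_windows` is a hypothetical helper, and it simply drops a final window that would run past the end of the data, which is an assumption made for this sketch.

```python
def forecast_windows(n_points, start, horizon, stride):
    """Return (begin, end) index pairs, end exclusive, for successive forecasts."""
    windows = []
    begin = start
    while begin + horizon <= n_points:
        windows.append((begin, begin + horizon))
        begin += stride
    return windows


windows = forecast_windows(n_points=100, start=10, horizon=30, stride=30)
# With stride == horizon the windows tile the axis without gaps or overlaps,
# so joining them end to end yields one continuous series.
covered = [i for begin, end in windows for i in range(begin, end)]
print(windows)
print(covered == list(range(10, 100)))
```

Choosing a stride equal to the forecast horizon is what makes the concatenated result continuous; a smaller stride would produce overlapping forecasts, and a larger one would leave gaps.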

[12]:

backtest_en = model_en.historical_forecasts(

series=series_en_transformed,

future_covariates=day_series,

start=val_en_transformed.start_time(),

num_samples=500,

forecast_horizon=30,

stride=30,

retrain=False,

verbose=True,

last_points_only=False,

)

backtest_en = concatenate(backtest_en, axis=0)

[13]:

plt.figure(figsize=(10, 6))

val_en_transformed.plot(label="actual")

backtest_en.plot(label="backtest q0.01 - q0.99", low_quantile=0.01, high_quantile=0.99)

backtest_en.plot(label="backtest q0.05 - q0.95", low_quantile=0.05, high_quantile=0.95)

plt.legend()

[13]:

<matplotlib.legend.Legend at 0x2c0581de0>
