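Redis as a vector database quick start guide

Understand how to use Redis as a vector database

This quick start guide helps you to:

- Understand what a vector database is

- Create a Redis vector database

- Create vector embeddings and store vectors

- Query data and perform a vector search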

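Understand vector databases

Data is often unstructured, which means that it isn't described by a well-defined schema. Examples of unstructured data include text passages, images, videos, or audio. One approach to storing and searching through unstructured data is to use vector embeddings.

- What are vectors? In machine learning and AI, vectors are sequences of numbers that represent data. They are the inputs and outputs of models, encapsulating the underlying information in numerical form. Vectors transform unstructured data, such as text, images, videos, and audio, into a format that machine learning models can process.

- Why are they important? Vectors capture complex patterns and semantic meanings inherent in data, which makes them powerful tools for a variety of applications. They allow machine learning models to understand and manipulate unstructured data more effectively.

- Enhancing traditional search. Traditional keyword or lexical search relies on exact matches of words or phrases, which can be limiting. In contrast, vector search, or semantic search, uses the rich information captured in vector embeddings. By mapping data into a vector space, similar items are positioned near each other based on their meaning. This approach allows for more accurate and meaningful search results, because it considers the context and semantic content of the query rather than just the exact words used.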
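To make the idea of "near each other in vector space" concrete, the following minimal sketch compares three hand-made vectors using cosine similarity. The three-dimensional vectors and their names are invented for illustration only; real embeddings are produced by a model and typically have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings"; the values are made up for this example.
kids_bike = [0.9, 0.1, 0.0]
childrens_bicycle = [0.8, 0.2, 0.1]
road_racer = [0.1, 0.9, 0.3]

similar = cosine_similarity(kids_bike, childrens_bicycle)
different = cosine_similarity(kids_bike, road_racer)

# The two kids' bike vectors point in nearly the same direction,
# so their similarity is much closer to 1.
print(f"{similar:.2f} vs {different:.2f}")
```

A similarity close to 1 means the vectors point in almost the same direction, which is how a vector search decides that two items are semantically related.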

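Create a Redis vector database

You can use Redis Stack as a vector database. It allows you to:

- Store vectors and the associated metadata within hashes or JSON documents

- Create and configure secondary indices for search

- Perform vector searches

- Update vectors and metadata

- Delete and clean up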
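As a rough illustration of the two storage options: in a JSON document a vector is an ordinary array of numbers, whereas in a hash field it is kept as a binary string of packed floats. The sketch below shows both forms using only the standard library. The four-element vector is made up, and the 32-bit little-endian float layout is an assumption chosen to match the FLOAT32 vector type used later in this guide.

```python
import json
import struct

vector = [0.25, -0.5, 1.0, 0.0]  # hypothetical 4-dimensional embedding

# JSON documents keep the vector as an ordinary array of numbers.
as_json = json.dumps({"description_embeddings": vector})

# Hash fields hold a binary blob: here, 4 bytes per 32-bit float.
as_bytes = struct.pack(f"<{len(vector)}f", *vector)

# Unpacking the blob recovers the original values.
restored = list(struct.unpack(f"<{len(as_bytes) // 4}f", as_bytes))

print(as_json)
print(len(as_bytes), restored)  # prints: 16 [0.25, -0.5, 1.0, 0.0]
```

The example values are exactly representable as 32-bit floats, so the round trip is lossless; arbitrary values may lose some precision when packed as float32.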

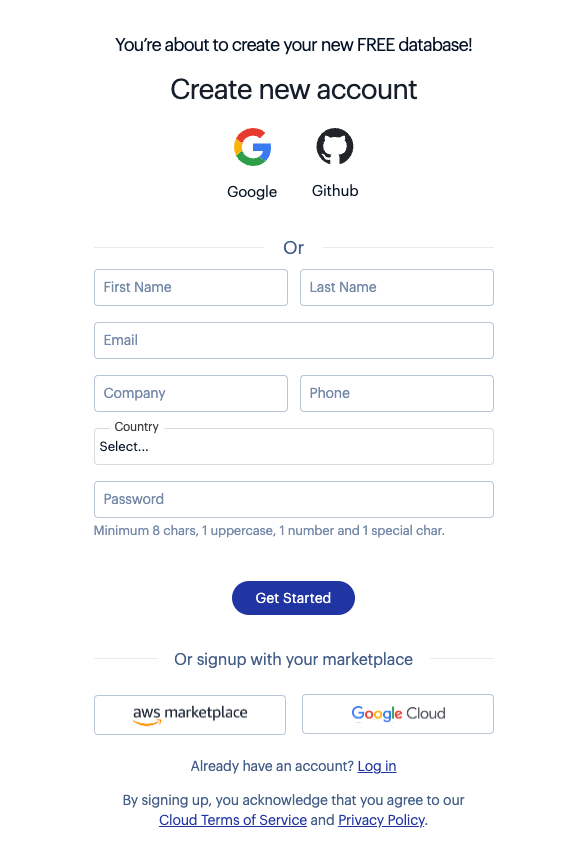

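The easiest way to get started is to use Redis Cloud:

- Create a free account.

- Follow the instructions to create a free database.

This free Redis Cloud database comes out of the box with all the Redis Stack features.

Alternatively, you can use the installation guides to install Redis Stack on your local machine.

You need to have the following features configured for your Redis server: JSON and Search and query.

Install the required Python packages

Create a Python virtual environment and install the following dependencies using pip:

- redis: You can find further details about the redis-py client library in the clients section of this documentation site.

- pandas: Pandas is a data analysis library.

- sentence-transformers: You will use the SentenceTransformers framework to generate embeddings on full text.

- tabulate: pandas uses tabulate to render Markdown.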
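Before running the samples, it can help to confirm that these packages are importable in the active environment. The following is a small sketch that uses only the standard library. Note that the import name can differ from the pip name (sentence-transformers is imported as sentence_transformers). The helper name missing_packages is hypothetical and not part of any library.

```python
import importlib.util

# pip package name -> module name used in import statements
REQUIRED = {
    "redis": "redis",
    "pandas": "pandas",
    "sentence-transformers": "sentence_transformers",
    "tabulate": "tabulate",
}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages that cannot be found for import."""
    return [
        pip_name
        for pip_name, module_name in required.items()
        if importlib.util.find_spec(module_name) is None
    ]


missing = missing_packages()
if missing:
    print("Install with: pip install " + " ".join(missing))
else:
    print("All required packages are available.")
```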

You will also need the following imports in your Python code:

"""

Code samples for vector database quickstart pages:

https://redis.io/docs/latest/develop/get-started/vector-database/

"""

import json
import time

import numpy as np
import pandas as pd
import requests
import redis
from redis.commands.search.field import (
    NumericField,
    TagField,
    TextField,
    VectorField,
)
from redis.commands.search.indexDefinition import IndexDefinition, IndexType
from redis.commands.search.query import Query
from sentence_transformers import SentenceTransformer


Connect

Connect to Redis. By default, Redis returns binary responses. To decode them, pass the decode_responses parameter set to True:

client = redis.Redis(host="localhost", port=6379, decode_responses=True)

res = client.ping()

# >>> True
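If your server is not running on localhost, the connection details often come as a single host:port string. The sketch below splits such a string into the keyword arguments used with redis.Redis in this guide (host, port, password, decode_responses). The helper name connection_kwargs and the example endpoint are hypothetical, and no connection is opened here.

```python
def connection_kwargs(endpoint, password=None):
    """Split a 'host:port' endpoint into keyword arguments for redis.Redis."""
    host, sep, port = endpoint.rpartition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError(f"expected 'host:port', got {endpoint!r}")
    kwargs = {"host": host, "port": int(port), "decode_responses": True}
    if password is not None:
        kwargs["password"] = password
    return kwargs


# Hypothetical endpoint; replace it with the one for your own database.
kwargs = connection_kwargs("redis.example.com:16379", password="your_password_here")
print(kwargs)

# Usage (requires redis-py and a reachable server):
#   client = redis.Redis(**kwargs)
```

Keeping the parsing separate from the client construction makes it easy to validate the endpoint before any network call is attempted.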


Here is an example connection string for a Cloud database that is hosted in us-east-1 and listens on port 16379: redis-16379.c283.us-east-1-4.ec2.cloud.redislabs.com:16379. The connection string has the format host:port. You must also copy and paste the username and password of your Cloud database. The line of code for connecting with the default user then changes to client = redis.Redis(host="redis-16379.c283.us-east-1-4.ec2.cloud.redislabs.com", port=16379, password="your_password_here", decode_responses=True).

Prepare the demo dataset

This quick start guide also uses the bikes dataset. Here is an example document from it:

{
  "model": "Jigger",
  "brand": "Velorim",
  "price": 270,
  "type": "Kids bikes",
  "specs": {
    "material": "aluminium",
    "weight": "10"
  },
  "description": "Small and powerful, the Jigger is the best ride for the smallest of tikes! ..."
}

The description field contains a free-form text description of a bike and will be used to create vector embeddings.

1. Fetch the demo data

You need to first fetch the demo dataset as a JSON array:

URL = ("https://raw.githubusercontent.com/bsbodden/redis_vss_getting_started"

"/main/data/bikes.json"

)

response = requests.get(URL, timeout=10)

bikes = response.json()
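Once the array is loaded, a quick sanity check can catch malformed records before they are stored. The following sketch parses a small inline JSON array, so it runs without network access, and checks that each record has the fields this guide relies on. The helper name validate_bikes is hypothetical, and the inline record reuses the sample document from this guide.

```python
import json

REQUIRED_FIELDS = {"model", "brand", "price", "type", "description"}


def validate_bikes(bikes):
    """Raise ValueError if any record lacks a field this guide relies on."""
    for index, bike in enumerate(bikes):
        missing = REQUIRED_FIELDS - set(bike)
        if missing:
            raise ValueError(f"record {index} is missing {sorted(missing)}")
    return len(bikes)


# Inline stand-in for the downloaded dataset (one record only).
raw = """
[
  {
    "model": "Jigger",
    "brand": "Velorim",
    "price": 270,
    "type": "Kids bikes",
    "specs": {"material": "aluminium", "weight": "10"},
    "description": "Small and powerful, the Jigger is the best ride for the smallest of tikes! ..."
  }
]
"""

bikes = json.loads(raw)
print(validate_bikes(bikes))  # prints 1
```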


Inspect the structure of one of the bike JSON documents:

json.dumps(bikes[0], indent=2)
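Note that json.dumps only returns a string. In an interactive session the result is echoed automatically, but in a script you need to print it to see anything. A minimal sketch, using an inline stand-in for bikes[0] whose fields mirror the sample document in this guide:

```python
import json

# Stand-in for bikes[0]; the fields mirror the sample document in this guide.
bike = {
    "model": "Jigger",
    "brand": "Velorim",
    "price": 270,
    "type": "Kids bikes",
    "specs": {"material": "aluminium", "weight": "10"},
}

# indent=2 pretty-prints nested objects with two spaces per level.
formatted = json.dumps(bike, indent=2)
print(formatted)

# Nested objects are ordinary dictionaries after parsing.
print(bike["specs"]["material"])  # prints aluminium
```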


2. Store the demo data in Redis

Now iterate over the bikes array and store the data as JSON documents in Redis by using the JSON.SET command. The following code uses a pipeline to minimize the network round-trip times:

pipeline = client.pipeline()
for i, bike in enumerate(bikes, start=1):
    redis_key = f"bikes:{i:03}"
    pipeline.json().set(redis_key, "$", bike)
res = pipeline.execute()
# >>> [True, True, True, True, True, True, True, True, True, True, True]
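The key format deserves a brief note: {i:03} zero-pads the counter to three digits, so the keys sort as strings in the same order as they do numerically. The small sketch below needs no Redis connection; the count of 11 matches the number of True results shown above.

```python
# Build the same key names the loop above generates for 11 documents.
padded = [f"bikes:{i:03}" for i in range(1, 12)]
unpadded = [f"bikes:{i}" for i in range(1, 12)]

print(padded[0], padded[9])  # prints bikes:001 bikes:010

# Zero-padded keys keep their numeric order when sorted as strings...
print(sorted(padded) == padded)  # prints True

# ...whereas unpadded keys do not ("bikes:10" sorts before "bikes:2").
print(sorted(unpadded) == unpadded)  # prints False
```

This matters whenever key names are sorted on the client side, since a plain string sort would otherwise misalign documents with any data computed in numeric order.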

res = client.json().get("bikes:010", "$.model")

# >>> ['Summit']

keys = sorted(client.keys("bikes:*"))

# >>> ['bikes:001', 'bikes:002', ..., 'bikes:011']

descriptions = client.json().mget(keys, "$.description")

descriptions = [item for sublist in descriptions for item in sublist]

embedder = SentenceTransformer("msmarco-distilbert-base-v4")

embeddings = embedder.encode(descriptions).astype(np.float32).tolist()

VECTOR_DIMENSION = len(embeddings[0])

# >>> 768

pipeline = client.pipeline()
for key, embedding in zip(keys, embeddings):
    pipeline.json().set(key, "$.description_embeddings", embedding)
pipeline.execute()
# >>> [True, True, True, True, True, True, True, True, True, True, True]

res = client.json().get("bikes:010")

# >>>

# {

# "model": "Summit",

# "brand": "nHill",

# "price": 1200,

# "type": "Mountain Bike",

# "specs": {

# "material": "alloy",

# "weight": "11.3"

# },

# "description": "This budget mountain bike from nHill performs well..."

# "description_embeddings": [

# -0.538114607334137,

# -0.49465855956077576,

# -0.025176964700222015,

# ...

# ]

# }

schema = (

TextField("$.model", no_stem=True, as_name="model"),

TextField("$.brand", no_stem=True, as_name="brand"),

NumericField("$.price", as_name="price"),

TagField("$.type", as_name="type"),

TextField("$.description", as_name="description"),

VectorField(

"$.description_embeddings",

"FLAT",

{

"TYPE": "FLOAT32",

"DIM": VECTOR_DIMENSION,

"DISTANCE_METRIC": "COSINE",

},

as_name="vector",

),

)

definition = IndexDefinition(prefix=["bikes:"], index_type=IndexType.JSON)

res = client.ft("idx:bikes_vss").create_index(fields=schema, definition=definition)

# >>> 'OK'

info = client.ft("idx:bikes_vss").info()

num_docs = info["num_docs"]

indexing_failures = info["hash_indexing_failures"]

# print(f"{num_docs} documents indexed with {indexing_failures} failures")

# >>> 11 documents indexed with 0 failures

query = Query("@brand:Peaknetic")

res = client.ft("idx:bikes_vss").search(query).docs

# print(res)

# >>> [

# Document {

# 'id': 'bikes:008',

# 'payload': None,

# 'brand': 'Peaknetic',

# 'model': 'Soothe Electric bike',

# 'price': '1950', 'description_embeddings': ...

query = Query("@brand:Peaknetic").return_fields("id", "brand", "model", "price")

res = client.ft("idx:bikes_vss").search(query).docs

# print(res)

# >>> [

# Document {

# 'id': 'bikes:008',

# 'payload': None,

# 'brand': 'Peaknetic',

# 'model': 'Soothe Electric bike',

# 'price': '1950'

# },

# Document {

# 'id': 'bikes:009',

# 'payload': None,

# 'brand': 'Peaknetic',

# 'model': 'Secto',

# 'price': '430'

# }

# ]

query = Query("@brand:Peaknetic @price:[0 1000]").return_fields(

"id", "brand", "model", "price"

)

res = client.ft("idx:bikes_vss").search(query).docs

# print(res)

# >>> [

# Document {

# 'id': 'bikes:009',

# 'payload': None,

# 'brand': 'Peaknetic',

# 'model': 'Secto',

# 'price': '430'

# }

# ]

queries = [

"Bike for small kids",

"Best Mountain bikes for kids",

"Cheap Mountain bike for kids",

"Female specific mountain bike",

"Road bike for beginners",

"Commuter bike for people over 60",

"Comfortable commuter bike",

"Good bike for college students",

"Mountain bike for beginners",

"Vintage bike",

"Comfortable city bike",

]

encoded_queries = embedder.encode(queries)

len(encoded_queries)

# >>> 11

def create_query_table(query, queries, encoded_queries, extra_params=None):

"""

Creates a query table.

"""

results_list = []

for i, encoded_query in enumerate(encoded_queries):

result_docs = (

client.ft("idx:bikes_vss")

.search(

query,

{"query_vector": np.array(encoded_query, dtype=np.float32).tobytes()}

| (extra_params if extra_params else {}),

)

.docs

)

for doc in result_docs:

vector_score = round(1 - float(doc.vector_score), 2)

results_list.append(

{

"query": queries[i],

"score": vector_score,

"id": doc.id,

"brand": doc.brand,

"model": doc.model,

"description": doc.description,

}

)

# Optional: convert the table to Markdown using Pandas

queries_table = pd.DataFrame(results_list)

queries_table.sort_values(

by=["query", "score"], ascending=[True, False], inplace=True

)

queries_table["query"] = queries_table.groupby("query")["query"].transform(

lambda x: [x.iloc[0]] + [""] * (len(x) - 1)

)

queries_table["description"] = queries_table["description"].apply(

lambda x: (x[:497] + "...") if len(x) > 500 else x

)

return queries_table.to_markdown(index=False)

query = (

Query("(*)=>[KNN 3 @vector $query_vector AS vector_score]")

.sort_by("vector_score")

.return_fields("vector_score", "id", "brand", "model", "description")

.dialect(2)

)

table = create_query_table(query, queries, encoded_queries)

print(table)

# >>> | Best Mountain bikes for kids | 0.54 | bikes:003...

hybrid_query = (

Query("(@brand:Peaknetic)=>[KNN 3 @vector $query_vector AS vector_score]")

.sort_by("vector_score")

.return_fields("vector_score", "id", "brand", "model", "description")

.dialect(2)

)

table = create_query_table(hybrid_query, queries, encoded_queries)

print(table)

# >>> | Best Mountain bikes for kids | 0.3 | bikes:008...

range_query = (

Query(

"@vector:[VECTOR_RANGE $range $query_vector]=>"

"{$YIELD_DISTANCE_AS: vector_score}"

)

.sort_by("vector_score")

.return_fields("vector_score", "id", "brand", "model", "description")

.paging(0, 4)

.dialect(2)

)

table = create_query_table(

range_query, queries[:1],

encoded_queries[:1],

{"range": 0.55}

)

print(table)

# >>> | Bike for small kids | 0.52 | bikes:001 | Velorim |...

Once the data is loaded, you can retrieve a specific attribute from one of the JSON documents in Redis using a JSONPath expression:

res = client.json().get("bikes:010", "$.model")
# >>> ['Summit']
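A JSONPath expression can match more than one value, which is why the call above returns a list (`['Summit']`) rather than a bare string. The following is a minimal sketch of how a single match could be unwrapped. `first_match` is a hypothetical helper name, not part of redis-py, and it assumes that a missing key or path shows up as `None` or an empty list.

```python
def first_match(matches, default=None):
    """Return the first JSONPath match, or `default` when nothing matched.

    `matches` is the list a JSONPath query such as "$.model" returns.
    Assumption: a missing key or path yields None or an empty list.
    """
    if not matches:
        return default
    return matches[0]


print(first_match(["Summit"]))         # Summit
print(first_match([], "unknown"))      # unknown
print(first_match(None, "unknown"))    # unknown
```

In the quickstart this would be called as `first_match(client.json().get("bikes:010", "$.model"))`.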

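3. Select a text embedding model

HuggingFace hosts a large catalog of text embedding models that can be served locally through the SentenceTransformers framework. Here we use the MS MARCO model, which is widely used in search engines, chatbots, and other AI applications.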

from sentence_transformers import SentenceTransformer

embedder = SentenceTransformer('msmarco-distilbert-base-v4')

4. Generate text embeddings

Collect all Redis keys that carry the bikes: prefix:

keys = sorted(client.keys("bikes:*"))
# >>> ['bikes:001', 'bikes:002', ..., 'bikes:011']
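The KEYS command walks the entire keyspace in a single call, which is fine for the eleven demo documents but can stall a large production server. A possible alternative, sketched here under assumptions, is redis-py's `scan_iter()`, which iterates with SCAN in small steps. `list_keys` and `FakeClient` are hypothetical names introduced for illustration; `FakeClient` only stands in for `redis.Redis` so that the sketch runs without a server.

```python
import fnmatch


def list_keys(client, pattern="bikes:*", count=100):
    """Collect the keys matching `pattern` with SCAN and return them sorted."""
    # SCAN may report the same key more than once, so de-duplicate with a set.
    return sorted(set(client.scan_iter(match=pattern, count=count)))


class FakeClient:
    """Hypothetical stand-in for redis.Redis; only mimics scan_iter()."""

    def __init__(self, keys):
        self._keys = keys

    def scan_iter(self, match=None, count=None):
        # Glob-style filtering, roughly what the MATCH option does.
        return (k for k in self._keys if fnmatch.fnmatchcase(k, match or "*"))


demo = FakeClient(["bikes:002", "cars:001", "bikes:001"])
print(list_keys(demo))  # ['bikes:001', 'bikes:002']
```

With a real connection, `list_keys(client)` would take the place of `sorted(client.keys("bikes:*"))`.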

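Pass the keys to the JSON.MGET command, together with the $.description path, to collect the descriptions in a list. Then pass the list of descriptions to the .encode() method: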

descriptions = client.json().mget(keys, "$.description")
descriptions = [item for sublist in descriptions for item in sublist]
embedder = SentenceTransformer("msmarco-distilbert-base-v4")
embeddings = embedder.encode(descriptions).astype(np.float32).tolist()
VECTOR_DIMENSION = len(embeddings[0])
# >>> 768
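JSON.MGET returns one entry per key, and each entry is itself a list of JSONPath matches, which is why the result is flattened before encoding. The flattening step assumes every key holds a document with a description. The sketch below shows one way to keep keys and descriptions aligned when that assumption does not hold. `pair_descriptions` is a hypothetical helper, and the sample data and the `None` entry for a missing key are assumptions made for illustration.

```python
def pair_descriptions(keys, per_key_matches):
    """Pair each key with its first matched description.

    Keys whose entry is None or empty (assumed to mean a missing document
    or a missing $.description path) are skipped, so the remaining keys
    and descriptions stay aligned for the later zip().
    """
    pairs = []
    for key, matches in zip(keys, per_key_matches):
        if not matches:
            continue
        pairs.append((key, matches[0]))
    return pairs


sample_keys = ["bikes:001", "bikes:002", "bikes:003"]
sample_result = [["Small and light kids bike"], None, ["Sturdy commuter bike"]]

pairs = pair_descriptions(sample_keys, sample_result)
print(pairs)
# [('bikes:001', 'Small and light kids bike'), ('bikes:003', 'Sturdy commuter bike')]
```

The aligned keys and descriptions can then be split again with `zip(*pairs)` before calling `.encode()`.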

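Insert the vectorized descriptions into the bike documents in Redis using the JSON.SET command. The following command adds a new field to each document under the JSONPath $.description_embeddings. Once again, use a pipeline to avoid unnecessary network round trips: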

pipeline = client.pipeline()
for key, embedding in zip(keys, embeddings):
    pipeline.json().set(key, "$.description_embeddings", embedding)
pipeline.execute()
# >>> [True, True, True, True, True, True, True, True, True, True, True]
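A pipeline queues commands on the client and sends them only when execute() is called. For data sets much larger than the eleven demo bikes, it may be preferable to flush the pipeline in batches so that no single request grows too large. The following is a sketch under assumptions: `store_embeddings`, the `batch_size` default, and the `FakeClient` / `_FakePipeline` stand-ins are hypothetical and exist only so the example runs without a Redis server.

```python
def store_embeddings(client, keys, embeddings, batch_size=500):
    """Write each embedding under $.description_embeddings in batches."""
    results = []
    pipeline = client.pipeline(transaction=False)
    for n, (key, embedding) in enumerate(zip(keys, embeddings), start=1):
        pipeline.json().set(key, "$.description_embeddings", embedding)
        if n % batch_size == 0:
            # Send the queued commands and start a fresh batch.
            results.extend(pipeline.execute())
    # Flush whatever is left in the final, possibly partial, batch.
    results.extend(pipeline.execute())
    return results


class _FakePipeline:
    """Hypothetical stand-in that records queued JSON.SET calls."""

    def __init__(self, store):
        self._store = store
        self._queued = []

    def json(self):
        return self

    def set(self, key, path, value):
        self._queued.append((key, path, value))
        return self

    def execute(self):
        results = []
        for key, path, value in self._queued:
            self._store.setdefault(key, {})[path] = value
            results.append(True)
        self._queued = []
        return results


class FakeClient:
    """Hypothetical stand-in for redis.Redis; only mimics pipeline()."""

    def __init__(self):
        self.store = {}

    def pipeline(self, transaction=True):
        return _FakePipeline(self.store)


fake = FakeClient()
res = store_embeddings(
    fake, ["bikes:001", "bikes:002", "bikes:003"], [[0.1], [0.2], [0.3]], batch_size=2
)
print(res)  # [True, True, True]
```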

Inspect one of the updated bike documents using the JSON.GET command:

"""

Code samples for vector database quickstart pages:

https://redis.io/docs/latest/develop/get-started/vector-database/

"""

import json

import time

import numpy as np

import pandas as pd

import requests

import redis

from redis.commands.search.field import (

NumericField,

TagField,

TextField,

VectorField,

)

from redis.commands.search.indexDefinition import IndexDefinition, IndexType

from redis.commands.search.query import Query

from sentence_transformers import SentenceTransformer

URL = ("https://raw.githubusercontent.com/bsbodden/redis_vss_getting_started"

"/main/data/bikes.json"

)

response = requests.get(URL, timeout=10)

bikes = response.json()

json.dumps(bikes[0], indent=2)

client = redis.Redis(host="localhost", port=6379, decode_responses=True)

res = client.ping()

# >>> True

pipeline = client.pipeline()

for i, bike in enumerate(bikes, start=1):

redis_key = f"bikes:{i:03}"

pipeline.json().set(redis_key, "$", bike)

res = pipeline.execute()

# >>> [True, True, True, True, True, True, True, True, True, True, True]

res = client.json().get("bikes:010", "$.model")

# >>> ['Summit']

keys = sorted(client.keys("bikes:*"))

# >>> ['bikes:001', 'bikes:002', ..., 'bikes:011']

descriptions = client.json().mget(keys, "$.description")

descriptions = [item for sublist in descriptions for item in sublist]

embedder = SentenceTransformer("msmarco-distilbert-base-v4")

embeddings = embedder.encode(descriptions).astype(np.float32).tolist()

VECTOR_DIMENSION = len(embeddings[0])

# >>> 768

pipeline = client.pipeline()

for key, embedding in zip(keys, embeddings):

pipeline.json().set(key, "$.description_embeddings", embedding)

pipeline.execute()

# >>> [True, True, True, True, True, True, True, True, True, True, True]

res = client.json().get("bikes:010")
# >>>
# {
#   "model": "Summit",
#   "brand": "nHill",
#   "price": 1200,
#   "type": "Mountain Bike",
#   "specs": {
#     "material": "alloy",
#     "weight": "11.3"
#   },
#   "description": "This budget mountain bike from nHill performs well...",
#   "description_embeddings": [
#     -0.538114607334137,
#     -0.49465855956077576,
#     -0.025176964700222015,
#     ...
#   ]
# }

schema = (
    TextField("$.model", no_stem=True, as_name="model"),
    TextField("$.brand", no_stem=True, as_name="brand"),
    NumericField("$.price", as_name="price"),
    TagField("$.type", as_name="type"),
    TextField("$.description", as_name="description"),
    VectorField(
        "$.description_embeddings",
        "FLAT",
        {
            "TYPE": "FLOAT32",
            "DIM": VECTOR_DIMENSION,
            "DISTANCE_METRIC": "COSINE",
        },
        as_name="vector",
    ),
)
definition = IndexDefinition(prefix=["bikes:"], index_type=IndexType.JSON)
res = client.ft("idx:bikes_vss").create_index(fields=schema, definition=definition)
# >>> 'OK'

info = client.ft("idx:bikes_vss").info()
num_docs = info["num_docs"]
indexing_failures = info["hash_indexing_failures"]
# print(f"{num_docs} documents indexed with {indexing_failures} failures")
# >>> 11 documents indexed with 0 failures

query = Query("@brand:Peaknetic")
res = client.ft("idx:bikes_vss").search(query).docs
# print(res)
# >>> [
#       Document {
#           'id': 'bikes:008',
#           'payload': None,
#           'brand': 'Peaknetic',
#           'model': 'Soothe Electric bike',
#           'price': '1950', 'description_embeddings': ...

query = Query("@brand:Peaknetic").return_fields("id", "brand", "model", "price")
res = client.ft("idx:bikes_vss").search(query).docs
# print(res)
# >>> [
#       Document {
#           'id': 'bikes:008',
#           'payload': None,
#           'brand': 'Peaknetic',
#           'model': 'Soothe Electric bike',
#           'price': '1950'
#       },
#       Document {
#           'id': 'bikes:009',
#           'payload': None,
#           'brand': 'Peaknetic',
#           'model': 'Secto',
#           'price': '430'
#       }
# ]

query = Query("@brand:Peaknetic @price:[0 1000]").return_fields(
    "id", "brand", "model", "price"
)
res = client.ft("idx:bikes_vss").search(query).docs
# print(res)
# >>> [
#       Document {
#           'id': 'bikes:009',
#           'payload': None,
#           'brand': 'Peaknetic',
#           'model': 'Secto',
#           'price': '430'
#       }
# ]

queries = [
    "Bike for small kids",
    "Best Mountain bikes for kids",
    "Cheap Mountain bike for kids",
    "Female specific mountain bike",
    "Road bike for beginners",
    "Commuter bike for people over 60",
    "Comfortable commuter bike",
    "Good bike for college students",
    "Mountain bike for beginners",
    "Vintage bike",
    "Comfortable city bike",
]
encoded_queries = embedder.encode(queries)
len(encoded_queries)
# >>> 11

def create_query_table(query, queries, encoded_queries, extra_params=None):
    """
    Creates a query table.
    """
    results_list = []
    for i, encoded_query in enumerate(encoded_queries):
        result_docs = (
            client.ft("idx:bikes_vss")
            .search(
                query,
                {"query_vector": np.array(encoded_query, dtype=np.float32).tobytes()}
                | (extra_params if extra_params else {}),
            )
            .docs
        )
        for doc in result_docs:
            vector_score = round(1 - float(doc.vector_score), 2)
            results_list.append(
                {
                    "query": queries[i],
                    "score": vector_score,
                    "id": doc.id,
                    "brand": doc.brand,
                    "model": doc.model,
                    "description": doc.description,
                }
            )

    # Optional: convert the table to Markdown using Pandas
    queries_table = pd.DataFrame(results_list)
    queries_table.sort_values(
        by=["query", "score"], ascending=[True, False], inplace=True
    )
    queries_table["query"] = queries_table.groupby("query")["query"].transform(