Basic Example for Generating Samples from a GCM

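A graphical causal model (GCM) describes the data-generating process of the modeled variables. Therefore, after we fit a GCM, we can also generate completely new samples from it and use it as a synthetic data generator based on the underlying models. Generating new samples can generally be done by sorting the nodes in topological order, randomly sampling the root nodes, and then propagating the data through the graph by evaluating the downstream causal mechanisms with randomly sampled noise. The dowhy.gcm package provides a simple helper function that does this automatically and therefore offers a simple API for drawing samples from a GCM.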
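The procedure just described is ancestral sampling. The following is a minimal, standard-library-only sketch of it, assuming hand-written mechanisms for the chain X→Y→Z used in this example. The dictionary `MECHANISMS` and the function `draw_samples_sketch` are hypothetical illustrations and are not part of dowhy.gcm, which learns its mechanisms from data.

```python
import random
from graphlib import TopologicalSorter

# Hypothetical hand-written mechanisms for the chain X -> Y -> Z (not dowhy's internals).
# Each node maps to (parents, mechanism); a mechanism receives the list of parent
# values and one freshly sampled noise value.
MECHANISMS = {
    "X": ((), lambda parents, noise: noise),                      # root: pure noise
    "Y": (("X",), lambda parents, noise: 2 * parents[0] + noise),
    "Z": (("Y",), lambda parents, noise: 3 * parents[0] + noise),
}


def draw_samples_sketch(mechanisms, num_samples, seed=None):
    rng = random.Random(seed)
    # TopologicalSorter expects a mapping node -> set of predecessors
    graph = {node: set(parents) for node, (parents, _) in mechanisms.items()}
    order = list(TopologicalSorter(graph).static_order())
    samples = {node: [] for node in order}
    for _ in range(num_samples):
        row = {}
        for node in order:
            parents, mechanism = mechanisms[node]
            noise = rng.gauss(0.0, 1.0)
            # Parents are already in `row` because we follow the topological order
            row[node] = mechanism([row[p] for p in parents], noise)
        for node, value in row.items():
            samples[node].append(value)
    return order, samples


order, samples = draw_samples_sketch(MECHANISMS, num_samples=1000, seed=0)
print(order)  # ['X', 'Y', 'Z']
```

Each loop iteration produces one row: the root node receives pure noise, and every downstream node evaluates its mechanism on parent values that the topological order guarantees were computed first.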

Let's look at the following example:

```python
import numpy as np
import pandas as pd

# Linear chain X -> Y -> Z with standard Gaussian noise on every variable
X = np.random.normal(loc=0, scale=1, size=1000)
Y = 2 * X + np.random.normal(loc=0, scale=1, size=1000)
Z = 3 * Y + np.random.normal(loc=0, scale=1, size=1000)

data = pd.DataFrame(data=dict(X=X, Y=Y, Z=Z))
data.head()
```

|   | X | Y | Z |
|---|---|---|---|
| 0 | -0.810737 | -0.393341 | -2.842339 |
| 1 | 0.475764 | 0.753873 | 2.736163 |
| 2 | 0.652685 | -1.099695 | -0.105575 |
| 3 | -2.459928 | -3.809661 | -15.383110 |
| 4 | -0.110089 | -0.447364 | -1.085281 |
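For reference, the three structural equations above define a zero-mean multivariate Gaussian whose covariance follows directly from the coefficients 2 and 3 and the unit noise variances:

$$
X = N_X,\quad Y = 2X + N_Y,\quad Z = 3Y + N_Z,\qquad N_X, N_Y, N_Z \overset{\text{iid}}{\sim} \mathcal{N}(0, 1)
$$

$$
\operatorname{Var}(Y) = 2^2 \cdot 1 + 1 = 5,\qquad \operatorname{Var}(Z) = 3^2 \cdot 5 + 1 = 46,\qquad
(X, Y, Z) \sim \mathcal{N}\!\left(0,\ \begin{pmatrix} 1 & 2 & 6 \\ 2 & 5 & 15 \\ 6 & 15 & 46 \end{pmatrix}\right)
$$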

Similar to the introduction, we generate data for the simple linear DAG X→Y→Z. Let's define the GCM and fit it to the data:

```python
import networkx as nx
import dowhy.gcm as gcm

causal_model = gcm.StructuralCausalModel(nx.DiGraph([('X', 'Y'), ('Y', 'Z')]))
gcm.auto.assign_causal_mechanisms(causal_model, data)  # Automatically assigns additive noise models to non-root nodes
gcm.fit(causal_model, data)
```

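Under the additive noise model assumption, each non-root node is modeled as a deterministic function of its parents plus independent noise, for example Y = f(X) + N_Y. As an illustrative assumption only, the sketch below fits such a model for the edge X→Y with ordinary least squares and keeps the residuals as an empirical noise distribution. `fit_linear_anm` and `sample_child` are hypothetical helpers; dowhy.gcm's automatic assignment may choose other regression models and noise representations.

```python
import random


def fit_linear_anm(parent, child):
    """Hypothetical helper: fit child = a * parent + b + N by ordinary least squares
    and keep the residuals as an empirical noise distribution."""
    n = len(parent)
    mean_p = sum(parent) / n
    mean_c = sum(child) / n
    cov = sum((p - mean_p) * (c - mean_c) for p, c in zip(parent, child))
    var = sum((p - mean_p) ** 2 for p in parent)
    a = cov / var
    b = mean_c - a * mean_p
    residuals = [c - (a * p + b) for p, c in zip(parent, child)]
    return a, b, residuals


def sample_child(a, b, residuals, parent_values, rng):
    # Evaluate the fitted function and add noise resampled from the residuals
    return [a * p + b + rng.choice(residuals) for p in parent_values]


rng = random.Random(0)
# Synthetic data following this example's equation Y = 2 * X + N_Y
x = [rng.gauss(0, 1) for _ in range(1000)]
y = [2 * xi + rng.gauss(0, 1) for xi in x]

a, b, residuals = fit_linear_anm(x, y)
print(a, b)  # a should be close to 2 and b close to 0
new_y = sample_child(a, b, residuals, [0.0, 1.0], rng)
```

Resampling the residuals avoids assuming a particular noise family; the trade-off is that generated noise values can only repeat values already seen in the training data.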

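We have now learned a generative model of the variables, based on the defined causal graph and the additive noise model assumption. To generate new samples from this model, we can now simply call: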

```python
generated_data = gcm.draw_samples(causal_model, num_samples=1000)
generated_data.head()
```

|   | X | Y | Z |
|---|---|---|---|
| 0 | 1.265052 | 3.045911 | 7.820090 |
| 1 | 1.017337 | 0.639482 | 2.721905 |
| 2 | 0.359946 | 1.851538 | 3.884363 |
| 3 | -0.448821 | -1.161121 | -3.166857 |
| 4 | 0.125756 | 2.136710 | 8.440217 |

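If our modeling assumptions are correct, the generated data should now resemble the observed data distribution, i.e., the generated samples should follow the joint distribution we defined for our example data. One way to check this is to estimate the KL divergence between the observed and the generated distribution. For this, we can use the evaluation module: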

```python
print(gcm.evaluate_causal_model(causal_model, data,
                                evaluate_causal_mechanisms=False,
                                evaluate_invertibility_assumptions=False))
```


Evaluated and the overall average KL divergence between generated and observed distribution and the graph structure. The results are as follows:

==== Evaluation of Generated Distribution ====

The overall average KL divergence between the generated and observed distribution is 0.02997159374891036

The estimated KL divergence indicates an overall very good representation of the data distribution.

==== Evaluation of the Causal Graph Structure ====

+-------------------------------------------------------------------------------------------------------+

| Falsification Summary |

+-------------------------------------------------------------------------------------------------------+

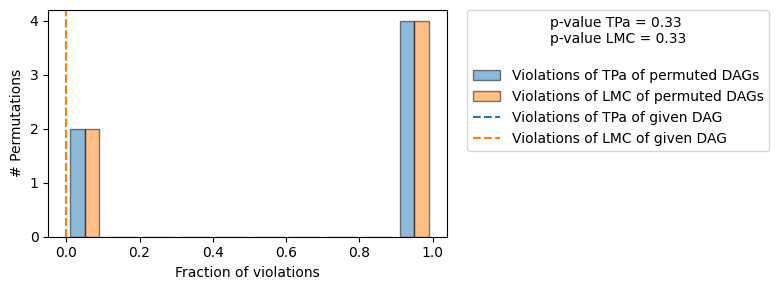

| The given DAG is not informative because 2 / 6 of the permutations lie in the Markov |

| equivalence class of the given DAG (p-value: 0.33). |

| The given DAG violates 0/1 LMCs and is better than 66.7% of the permuted DAGs (p-value: 0.33). |

| Based on the provided significance level (0.2) and because the DAG is not informative, |

| we do not reject the DAG. |

+-------------------------------------------------------------------------------------------------------+

==== NOTE ====

Always double check the made model assumptions with respect to the graph structure and choice of causal mechanisms.

All these evaluations give some insight into the goodness of the causal model, but should not be overinterpreted, since some causal relationships can be intrinsically hard to model. Furthermore, many algorithms are fairly robust against misspecifications or poor performances of causal mechanisms.

This confirms that the generated distribution is close to the observed one.
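As a rough, self-contained illustration of what a KL divergence between observed and generated samples measures, the sketch below estimates KL(P || Q) for one-dimensional samples using shared histogram bins with add-one smoothing. This is a swapped-in, deliberately simple estimator: it is not the estimator dowhy.gcm uses internally (which this notebook does not show), `histogram_kl` is a hypothetical helper, and comparing the full joint distribution, as the evaluation does, would need a multivariate estimator.

```python
import math
import random


def histogram_kl(p_samples, q_samples, num_bins=20):
    """Hypothetical helper: rough estimate of KL(P || Q) for 1-D samples."""
    lo = min(min(p_samples), min(q_samples))
    hi = max(max(p_samples), max(q_samples))
    width = (hi - lo) / num_bins

    def bin_probabilities(samples):
        counts = [0] * num_bins
        for s in samples:
            # Clamp so that the maximum value falls into the last bin
            idx = min(int((s - lo) / width), num_bins - 1)
            counts[idx] += 1
        # Add-one (Laplace) smoothing keeps every bin probability positive
        total = len(samples) + num_bins
        return [(c + 1) / total for c in counts]

    p = bin_probabilities(p_samples)
    q = bin_probabilities(q_samples)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


rng = random.Random(0)
observed = [rng.gauss(0, 1) for _ in range(1000)]
generated_good = [rng.gauss(0, 1) for _ in range(1000)]  # same distribution
generated_bad = [rng.gauss(3, 1) for _ in range(1000)]   # shifted distribution

print(histogram_kl(observed, generated_good))  # small
print(histogram_kl(observed, generated_bad))   # much larger
```

A value near zero means the binned distributions almost coincide; a shifted or otherwise misspecified generator pushes the estimate up.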

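While the evaluation also gives us insight into the causal graph structure, we cannot confirm the graph structure; we can only reject it if we find inconsistencies between the dependencies in the observed data and those encoded by the graph. In our case, we do not reject the DAG, but there are other equivalent DAGs that would not be rejected either. To see this, consider the example above: X→Y→Z and X←Y←Z would generate the same observational distribution (since they encode the same conditional independencies), but only X→Y→Z would generate the correct interventional distribution (e.g., when intervening on Y).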
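This claim can be checked numerically with a hand-rolled linear-Gaussian sketch that does not use dowhy.gcm (`sample_forward` and `sample_reversed` are hypothetical helpers). The parameters of the reversed chain Z→Y→X are derived from the covariances of the forward chain (Var(Y) = 5, Var(Z) = 46, Cov(X, Y) = 2, Cov(Y, Z) = 15), so both models share the same observational distribution. Intervening with do(Y = 1) then separates them: in the forward model X keeps its mean of 0 and Z shifts to 3, whereas in the reversed model X shifts to 0.4 and Z keeps its mean of 0.

```python
import math
import random


def sample_forward(n, rng, do_y=None):
    """True chain X -> Y -> Z; optionally apply the intervention do(Y = do_y)."""
    rows = []
    for _ in range(n):
        x = rng.gauss(0, 1)
        y = do_y if do_y is not None else 2 * x + rng.gauss(0, 1)
        z = 3 * y + rng.gauss(0, 1)
        rows.append((x, y, z))
    return rows


def sample_reversed(n, rng, do_y=None):
    """Reversed chain Z -> Y -> X with the same observational joint distribution."""
    rows = []
    for _ in range(n):
        z = rng.gauss(0, math.sqrt(46))  # Var(Z) = 3^2 * 5 + 1
        # E[Y | Z] = Cov(Y,Z)/Var(Z) * Z = 15/46 * Z; Var(Y | Z) = 5 - 15^2/46 = 5/46
        y = do_y if do_y is not None else 15 / 46 * z + rng.gauss(0, math.sqrt(5 / 46))
        # E[X | Y] = Cov(X,Y)/Var(Y) * Y = 0.4 * Y; Var(X | Y) = 1 - 2^2/5 = 0.2
        x = 0.4 * y + rng.gauss(0, math.sqrt(0.2))
        rows.append((x, y, z))
    return rows


def mean(values):
    return sum(values) / len(values)


def cov(a, b):
    ma, mb = mean(a), mean(b)
    return sum((u - ma) * (v - mb) for u, v in zip(a, b)) / len(a)


rng = random.Random(0)
n = 20000
# zip(*rows) turns a list of (x, y, z) rows into three columns
fwd_obs = list(zip(*sample_forward(n, rng)))
rev_obs = list(zip(*sample_reversed(n, rng)))
# Observationally both agree, e.g. Cov(X, Z) is about 6 in both models
print(cov(fwd_obs[0], fwd_obs[2]), cov(rev_obs[0], rev_obs[2]))

fwd_do = list(zip(*sample_forward(n, rng, do_y=1.0)))
rev_do = list(zip(*sample_reversed(n, rng, do_y=1.0)))
# Under do(Y = 1) they disagree: E[X] is about 0 vs 0.4, E[Z] is about 3 vs 0
print(mean(fwd_do[0]), mean(rev_do[0]))
print(mean(fwd_do[2]), mean(rev_do[2]))
```

No amount of observational data can distinguish the two models here, which is why the falsification step reports the given DAG as not informative.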