Finding the Root Cause of Elevated Latencies in a Microservice Architecture

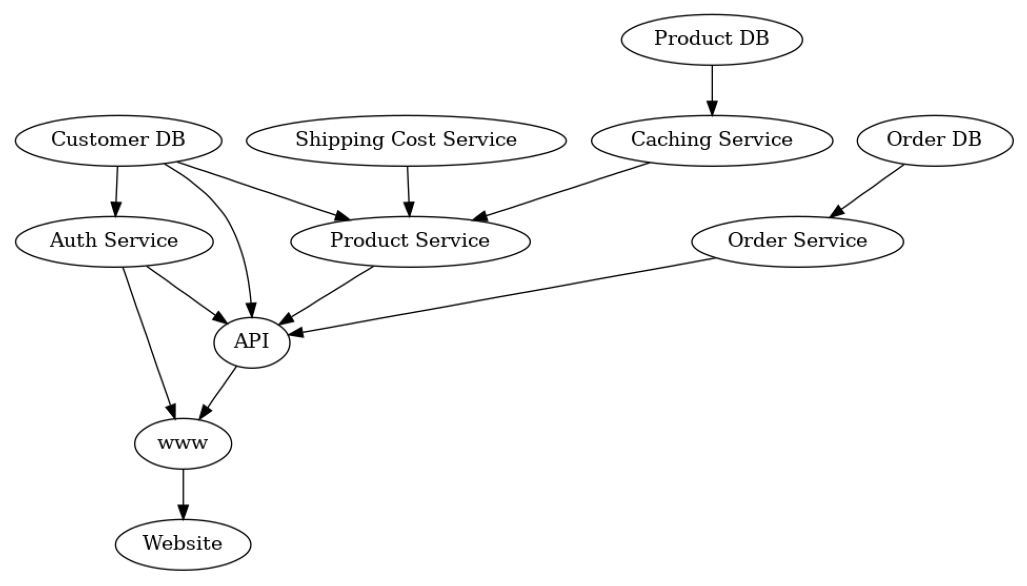

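In this case study, we identify the root causes of "unexpected" observed latencies in the cloud services that power an online shop. We focus on the process of placing an order, which involves several services that make sure the order is valid, the customer is authenticated, the shipping costs are calculated correctly, and the shipping process is initiated accordingly. The dependencies between the services are shown in the accompanying figure.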

This kind of dependency graph can be obtained from services such as Amazon X-Ray, or defined manually based on the trace structure of requests.

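We assume that the dependency graph above is correct and that we can measure the latency (in seconds) of each node for an order request. In the case of Website, the latency is the time until a confirmation of the order is shown. For simplicity, we assume that the services are synchronous, i.e., a service has to wait for its downstream services before it can proceed. Further, we assume that no two nodes are affected by the same unobserved factor (a hidden confounder), i.e., that causal sufficiency holds. Since, for instance, network traffic affects multiple services, this assumption is often violated in real-world scenarios. Weak confounders can be neglected, whereas stronger ones (such as network traffic) could cause multiple nodes to be falsely identified as root causes. In general, we can only identify causes that are part of the data.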
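To see why a strong hidden confounder matters, consider a minimal sketch in which two services that never call each other share an unobserved network delay. All distributions and values here are assumptions chosen for illustration and are not taken from the case study data.

```python
import random

random.seed(0)


def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


n = 5000
# Hypothetical unobserved network delay shared by both services (seconds).
traffic = [random.expovariate(1 / 0.3) for _ in range(n)]
# Two services without any call relationship; each adds its own independent latency.
service_a = [t + abs(random.gauss(0, 0.1)) for t in traffic]
service_b = [t + abs(random.gauss(0, 0.1)) for t in traffic]
# The same two services without the shared factor.
isolated_a = [abs(random.gauss(0, 0.1)) for _ in range(n)]
isolated_b = [abs(random.gauss(0, 0.1)) for _ in range(n)]

confounded_corr = pearson(service_a, service_b)
isolated_corr = pearson(isolated_a, isolated_b)
print(f"with hidden traffic: {confounded_corr:.2f}, without: {isolated_corr:.2f}")
```

The two latencies are strongly correlated only when the shared factor is present. A model that does not observe the factor has to explain this dependence through the nodes it does observe.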

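Under these assumptions, the observed latency of a node is defined as the sum of the latency of the node itself (its intrinsic latency) and the latencies of all its direct child nodes. This may also include calling a child node multiple times.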
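As a small worked example of this definition, the following sketch computes observed latencies for a two-node excerpt of the graph. The dictionaries and the numeric values are hypothetical and only illustrate the rule.

```python
# Hypothetical intrinsic latencies in seconds; the values are illustrative only.
intrinsic = {"Order Service": 0.55, "Order DB": 0.15}

# Direct child calls of each service. Listing a child twice would model two calls.
children = {"Order Service": ["Order DB"], "Order DB": []}


def observed_latency(node):
    """Intrinsic latency of `node` plus the observed latency of each direct child call."""
    return intrinsic[node] + sum(observed_latency(child) for child in children[node])


print(observed_latency("Order DB"))       # a leaf only has its intrinsic latency
print(observed_latency("Order Service"))  # intrinsic latency plus the Order DB call
```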

Let us load data with the observed latencies of each node.

[1]:

import pandas as pd

normal_data = pd.read_csv("rca_microservice_architecture_latencies.csv")

normal_data.head()

[1]:

| | Product DB | Customer DB | Order DB | Shipping Cost Service | Caching Service | Product Service | Auth Service | Order Service | API | www | Website |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.553608 | 0.057729 | 0.153977 | 0.120217 | 0.122195 | 0.391738 | 0.399664 | 0.710525 | 2.103962 | 2.580403 | 2.971071 |
| 1 | 0.053393 | 0.239560 | 0.297794 | 0.142854 | 0.275471 | 0.545372 | 0.646370 | 0.991620 | 2.932192 | 3.804571 | 3.895535 |
| 2 | 0.023860 | 0.300044 | 0.042169 | 0.125017 | 0.152685 | 0.574918 | 0.672228 | 0.964807 | 3.106218 | 4.076227 | 4.441924 |
| 3 | 0.118598 | 0.478097 | 0.042383 | 0.143969 | 0.222720 | 0.618129 | 0.638179 | 0.938366 | 3.217643 | 4.043560 | 4.334924 |
| 4 | 0.524901 | 0.078031 | 0.031694 | 0.231884 | 0.647452 | 1.081753 | 0.388506 | 0.711937 | 2.793605 | 3.215307 | 3.255062 |

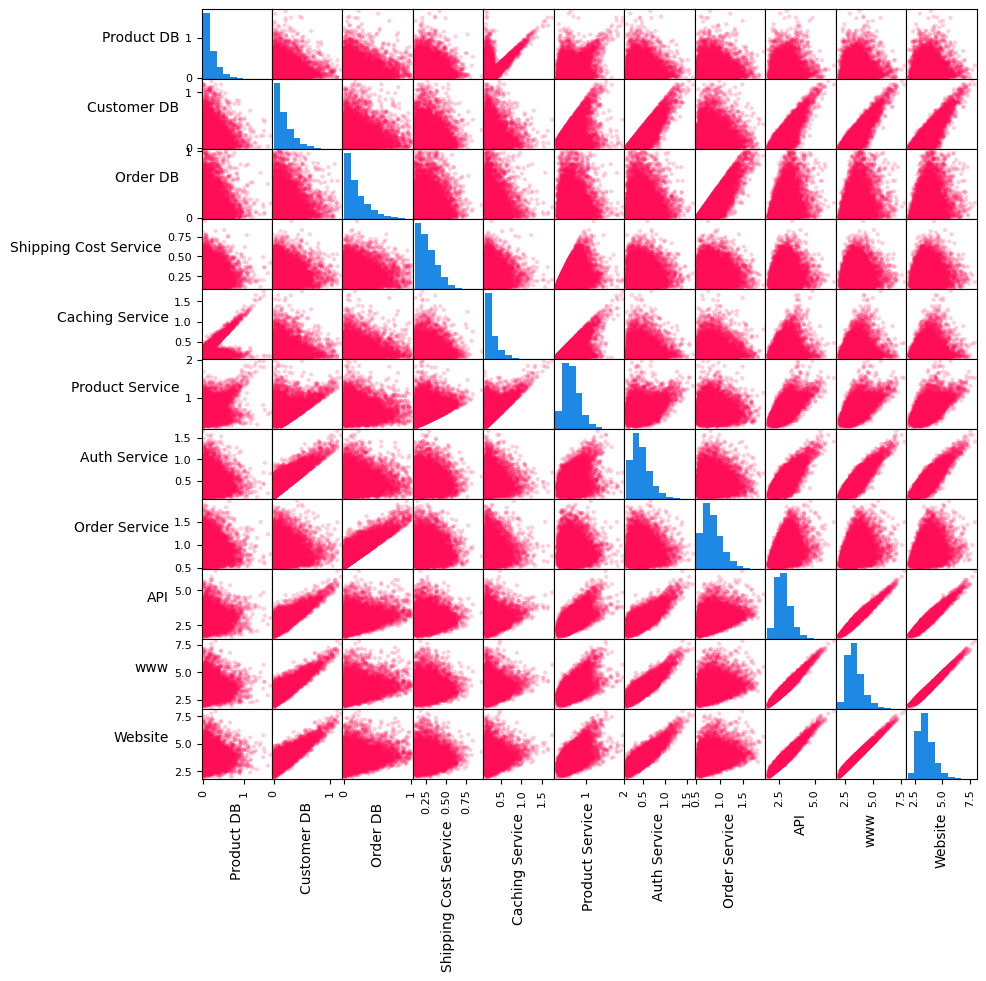

Let us also take a look at the pair-wise scatter plots and histograms of the variables.

[2]:

axes = pd.plotting.scatter_matrix(normal_data, figsize=(10, 10), c='#ff0d57', alpha=0.2, hist_kwds={'color':['#1E88E5']});

for ax in axes.flatten():
    ax.xaxis.label.set_rotation(90)
    ax.yaxis.label.set_rotation(0)
    ax.yaxis.label.set_ha('right')

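In the matrix above, the plots on the diagonal are histograms of the variables, whereas those off the diagonal are scatter plots of pairs of variables. The histograms of the services without a dependency, namely Customer DB, Product DB, Order DB and Shipping Cost Service, have shapes similar to one half of a Gaussian distribution. The scatter plots of various pairs of variables (e.g., API and www, www and Website, Order Service and Order DB) show linear relations. We will use this information shortly to assign generative causal models to the nodes of the causal graph.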

Setting up the causal model

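If we look at the Website node, it becomes apparent that the latency we experience there depends on the latencies of all downstream nodes. In particular, if one of the downstream nodes takes a long time, Website will also take a long time to show an update. Given this, the causal graph of the latencies can be built by inverting the arrows of the service graph.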
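The inversion itself is mechanical. The sketch below assumes the call graph is available as a list of (caller, callee) pairs. The `call_edges` list is a hypothetical excerpt, not the full graph of this case study.

```python
# Hypothetical excerpt of a call graph: (caller, callee) pairs.
call_edges = [
    ("Website", "www"),
    ("www", "API"),
    ("API", "Order Service"),
    ("Order Service", "Order DB"),
]

# Latency propagates from callee to caller, so each causal edge is the reversed call edge.
causal_edges = [(callee, caller) for caller, callee in call_edges]

for cause, effect in causal_edges:
    print(f"{cause} -> {effect}")
```

If the call graph is already a networkx `DiGraph`, its `reverse()` method gives the same result.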

[3]:

import networkx as nx

from dowhy import gcm

from dowhy.utils import plot, bar_plot

gcm.util.general.set_random_seed(0)

causal_graph = nx.DiGraph([('www', 'Website'),
                           ('Auth Service', 'www'),
                           ('API', 'www'),
                           ('Customer DB', 'Auth Service'),
                           ('Customer DB', 'API'),
                           ('Product Service', 'API'),
                           ('Auth Service', 'API'),
                           ('Order Service', 'API'),
                           ('Shipping Cost Service', 'Product Service'),
                           ('Caching Service', 'Product Service'),
                           ('Product DB', 'Caching Service'),
                           ('Customer DB', 'Product Service'),
                           ('Order DB', 'Order Service')])

[4]:

plot(causal_graph, figure_size=[13, 13])

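Here, we are interested in the causal relationships between the latencies of services rather than the order in which the services are called.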

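We will use the information from the pair-wise scatter plots and histograms to assign causal mechanisms manually. In particular, we assign half-normal distributions to the root nodes (i.e., Customer DB, Product DB, Order DB and Shipping Cost Service). For non-root nodes, we assign linear additive noise models (which the scatter plots of many parent-child pairs suggest) with the empirical distribution of the noise terms.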

[5]:

from scipy.stats import halfnorm

causal_model = gcm.StructuralCausalModel(causal_graph)

for node in causal_graph.nodes:
    if len(list(causal_graph.predecessors(node))) > 0:
        causal_model.set_causal_mechanism(node, gcm.AdditiveNoiseModel(gcm.ml.create_linear_regressor()))
    else:
        causal_model.set_causal_mechanism(node, gcm.ScipyDistribution(halfnorm))

Alternatively, if we have no prior knowledge or are not familiar with the statistical implications, we can automate this step:

gcm.auto.assign_causal_mechanisms(causal_model, normal_data)
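To make the notion of a linear additive noise model with an empirical noise distribution concrete, here is a minimal standard-library sketch for a single parent-child pair. It is not how the library implements `AdditiveNoiseModel`. The data, the helper `fit_linear` and the sampler `sample_child` are assumptions made up for illustration.

```python
import random

random.seed(0)

# Hypothetical parent latencies and a child that follows child = parent + noise.
parent = [abs(random.gauss(0, 0.2)) for _ in range(2000)]
noise = [0.5 + abs(random.gauss(0, 0.2)) for _ in range(2000)]
child = [p + e for p, e in zip(parent, noise)]


def fit_linear(x, y):
    """Ordinary least squares for one feature; returns (slope, intercept)."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    slope = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
             / sum((a - mean_x) ** 2 for a in x))
    return slope, mean_y - slope * mean_x


slope, intercept = fit_linear(parent, child)
# The residuals serve as the empirical distribution of the noise term.
residuals = [c - (slope * p + intercept) for p, c in zip(parent, child)]


def sample_child(parent_value):
    """Draw a child latency: linear prediction plus a resampled residual."""
    return slope * parent_value + intercept + random.choice(residuals)


print(f"slope={slope:.2f}, intercept={intercept:.2f}, sample={sample_child(0.3):.2f}")
```

The fitted slope should be close to 1 here because the child was generated by adding the parent latency. The intercept absorbs the mean of the noise.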

Before we continue with the first scenario, let us first evaluate our causal model:

[6]:

gcm.fit(causal_model, normal_data)

print(gcm.evaluate_causal_model(causal_model, normal_data))

Fitting causal mechanism of node Order DB: 100%|██████████| 11/11 [00:00<00:00, 74.45it/s]

Evaluating causal mechanisms...: 100%|██████████| 11/11 [00:02<00:00, 4.57it/s]

Test permutations of given graph: 100%|██████████| 50/50 [01:13<00:00, 1.47s/it]

Evaluated the performance of the causal mechanisms and the invertibility assumption of the causal mechanisms and the overall average KL divergence between generated and observed distribution and the graph structure. The results are as follows:

==== Evaluation of Causal Mechanisms ====

The used evaluation metrics are:

- KL divergence (only for root-nodes): Evaluates the divergence between the generated and the observed distribution.

- Mean Squared Error (MSE): Evaluates the average squared differences between the observed values and the conditional expectation of the causal mechanisms.

- Normalized MSE (NMSE): The MSE normalized by the standard deviation for better comparison.

- R2 coefficient: Indicates how much variance is explained by the conditional expectations of the mechanisms. Note, however, that this can be misleading for nonlinear relationships.

- F1 score (only for categorical non-root nodes): The harmonic mean of the precision and recall indicating the goodness of the underlying classifier model.

- (normalized) Continuous Ranked Probability Score (CRPS): The CRPS generalizes the Mean Absolute Percentage Error to probabilistic predictions. This gives insights into the accuracy and calibration of the causal mechanisms.

NOTE: Every metric focuses on different aspects and they might not consistently indicate a good or bad performance.

We will mostly utilize the CRPS for comparing and interpreting the performance of the mechanisms, since this captures the most important properties for the causal model.

--- Node Customer DB

- The KL divergence between generated and observed distribution is 0.026730847930932323.

The estimated KL divergence indicates an overall very good representation of the data distribution.

--- Node Shipping Cost Service

- The KL divergence between generated and observed distribution is 0.0.

The estimated KL divergence indicates an overall very good representation of the data distribution.

--- Node Product DB

- The KL divergence between generated and observed distribution is 0.04309162176082666.

The estimated KL divergence indicates an overall very good representation of the data distribution.

--- Node Order DB

- The KL divergence between generated and observed distribution is 0.03223241982914243.

The estimated KL divergence indicates an overall very good representation of the data distribution.

--- Node Auth Service

- The MSE is 0.014405407564615797.

- The NMSE is 0.5465495860903358.

- The R2 coefficient is 0.7012359660103364.

- The normalized CRPS is 0.30265499391971407.

The estimated CRPS indicates a good model performance.

--- Node Caching Service

- The MSE is 0.02313410655739081.

- The NMSE is 0.8421286359832795.

- The R2 coefficient is 0.2907975654579837.

- The normalized CRPS is 0.46264654494762336.

The estimated CRPS indicates only a fair model performance. Note, however, that a high CRPS could also result from a small signal to noise ratio.

--- Node Order Service

- The MSE is 0.014402623661901339.

- The NMSE is 0.5485279868056235.

- The R2 coefficient is 0.6990119423108653.

- The normalized CRPS is 0.30321497413904863.

The estimated CRPS indicates a good model performance.

--- Node Product Service

- The MSE is 0.020441295317239063.

- The NMSE is 0.6498526098564431.

- The R2 coefficient is 0.5775517273698512.

- The normalized CRPS is 0.3643692225262228.

The estimated CRPS indicates only a fair model performance. Note, however, that a high CRPS could also result from a small signal to noise ratio.

--- Node API

- The MSE is 0.014478740667166996.

- The NMSE is 0.20970446018379812.

- The R2 coefficient is 0.9559977978951697.

- The normalized CRPS is 0.11586910197147379.

The estimated CRPS indicates a very good model performance.

--- Node www

- The MSE is 0.011040360284322542.

- The NMSE is 0.1375983208617472.

- The R2 coefficient is 0.9810608560286648.

- The normalized CRPS is 0.0779437689368548.

The estimated CRPS indicates a very good model performance.

--- Node Website

- The MSE is 0.020774228074041143.

- The NMSE is 0.185120350663801.

- The R2 coefficient is 0.9657171988034176.

- The normalized CRPS is 0.10227892839479753.

The estimated CRPS indicates a very good model performance.

==== Evaluation of Invertible Functional Causal Model Assumption ====

--- The model assumption for node www is not rejected with a p-value of 1.0 (after potential adjustment) and a significance level of 0.05.

This implies that the model assumption might be valid.

--- The model assumption for node Website is not rejected with a p-value of 1.0 (after potential adjustment) and a significance level of 0.05.

This implies that the model assumption might be valid.

--- The model assumption for node Auth Service is not rejected with a p-value of 0.46642517211292034 (after potential adjustment) and a significance level of 0.05.

This implies that the model assumption might be valid.

--- The model assumption for node API is rejected with a p-value of 0.010732197989039571 (after potential adjustment) and a significance level of 0.05.

This implies that the model assumption might not be valid. This is, the relationship cannot be represent with this type of mechanism or there is a hidden confounder between the node and its parents.

--- The model assumption for node Product Service is rejected with a p-value of 0.0 (after potential adjustment) and a significance level of 0.05.

This implies that the model assumption might not be valid. This is, the relationship cannot be represent with this type of mechanism or there is a hidden confounder between the node and its parents.

--- The model assumption for node Order Service is not rejected with a p-value of 1.0 (after potential adjustment) and a significance level of 0.05.

This implies that the model assumption might be valid.

--- The model assumption for node Caching Service is rejected with a p-value of 0.0 (after potential adjustment) and a significance level of 0.05.

This implies that the model assumption might not be valid. This is, the relationship cannot be represent with this type of mechanism or there is a hidden confounder between the node and its parents.

Note that these results are based on statistical independence tests, and the fact that the assumption was not rejected does not necessarily imply that it is correct. There is just no evidence against it.

==== Evaluation of Generated Distribution ====

The overall average KL divergence between the generated and observed distribution is 0.11748736081286179

The estimated KL divergence indicates an overall very good representation of the data distribution.

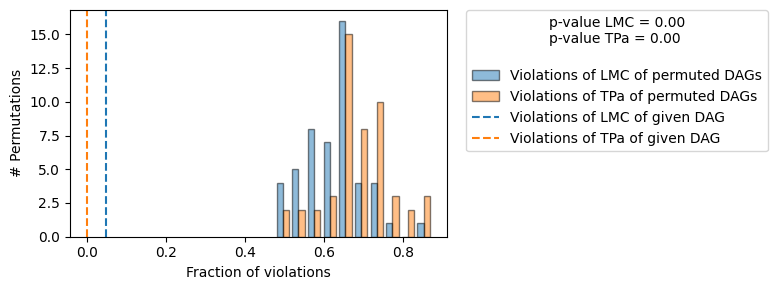

==== Evaluation of the Causal Graph Structure ====

+-------------------------------------------------------------------------------------------------------+

| Falsification Summary |

+-------------------------------------------------------------------------------------------------------+

| The given DAG is informative because 0 / 50 of the permutations lie in the Markov |

| equivalence class of the given DAG (p-value: 0.00). |

| The given DAG violates 3/63 LMCs and is better than 100.0% of the permuted DAGs (p-value: 0.00). |

| Based on the provided significance level (0.2) and because the DAG is informative, |

| we do not reject the DAG. |

+-------------------------------------------------------------------------------------------------------+

==== NOTE ====

Always double check the made model assumptions with respect to the graph structure and choice of causal mechanisms.

All these evaluations give some insight into the goodness of the causal model, but should not be overinterpreted, since some causal relationships can be intrinsically hard to model. Furthermore, many algorithms are fairly robust against misspecifications or poor performances of causal mechanisms.

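This confirms the goodness of our causal model. However, we also see that the additive noise model assumption is rejected for 'Product Service' and 'Caching Service'. This agrees with the data generation process, in which these two nodes follow non-additive noise models. In the run shown above, the assumption is also rejected for 'API', though with a larger p-value (about 0.011). As we see in the following, most of the algorithms are still fairly robust against such violations or against poor performance of a causal mechanism.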

For more detailed insights, set compare_mechanism_baselines to True. Note, however, that this will take longer.

Scenario 1: Observing a single outlier

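Suppose we get an alert from our system that a customer experienced an unusually high latency when placing an order. Our task is now to investigate this issue and to find the root cause of this behaviour.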

We first load the latencies that correspond to the alert.

[7]:

outlier_data = pd.read_csv("rca_microservice_architecture_anomaly.csv")

outlier_data

[7]:

| | Product DB | Customer DB | Order DB | Shipping Cost Service | Caching Service | Product Service | Auth Service | Order Service | API | www | Website |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.493145 | 0.180896 | 0.192593 | 0.197001 | 2.130865 | 2.48584 | 0.533847 | 1.132151 | 4.85583 | 5.522179 | 5.572588 |

We are interested in the increased latency of Website that the customer directly experienced.

[8]:

outlier_data.iloc[0]['Website']-normal_data['Website'].mean()

[8]:

For this customer, Website was roughly 2 seconds slower than it is for other customers on average. Why?

Attributing an outlier latency at a target service to other services

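To answer why Website was slower for this customer, we attribute the outlier latency at Website to the upstream services in the causal graph. We refer the reader to Janzing et al., 2019 for the scientific details behind this API. We compute 95% bootstrapped confidence intervals of our attributions. In particular, we learn the causal models from a random subset of the normal data and attribute the target outlier score using those models, repeating the process 10 times. This way, the confidence intervals we report account for (a) the uncertainty of our causal models and (b) the uncertainty in the attributions due to the variance in the samples drawn from those causal models.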

[9]:

gcm.config.disable_progress_bars() # to disable print statements when computing Shapley values

median_attribs, uncertainty_attribs = gcm.confidence_intervals(
    gcm.fit_and_compute(gcm.attribute_anomalies,
                        causal_model,
                        normal_data,
                        target_node='Website',
                        anomaly_samples=outlier_data),
    num_bootstrap_resamples=10)
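The resampling idea behind these confidence intervals can be sketched without the library. The helper `bootstrap_interval` below is hypothetical and uses a crude percentile rule. It only illustrates the principle of re-estimating a quantity on random subsets and summarizing the spread, and is not the implementation of `gcm.confidence_intervals`.

```python
import random
import statistics

random.seed(0)


def bootstrap_interval(estimate, data, num_resamples=10, frac=0.6, level=0.95):
    """Median and a crude percentile interval of `estimate` over random subsets of `data`."""
    size = max(1, int(frac * len(data)))
    estimates = sorted(estimate(random.sample(data, size)) for _ in range(num_resamples))
    lower_idx = int((1 - level) / 2 * (num_resamples - 1))
    upper_idx = round((1 + level) / 2 * (num_resamples - 1))
    return statistics.median(estimates), (estimates[lower_idx], estimates[upper_idx])


# Hypothetical latencies standing in for one column of the normal data.
latencies = [abs(random.gauss(0, 0.2)) for _ in range(1000)]
median_estimate, (lower, upper) = bootstrap_interval(statistics.mean, latencies)
print(f"mean latency: {median_estimate:.3f} (interval {lower:.3f} to {upper:.3f})")
```

With only 10 resamples the interval is coarse. More resamples tighten the estimate at the cost of refitting the model more often.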

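By default, a quantile-based anomaly score is used that estimates the negative log-probability of a sample being normal. That is, the higher the probability that a sample is an outlier, the larger the score. The library offers different kinds of outlier scoring functions, such as the z-score, where the mean is the expected value based on the causal model.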
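A score of this kind can be sketched as the negative logarithm of an empirical tail probability. The function `tail_score` and its add-one smoothing are assumptions made for illustration and do not reproduce the library's scorer.

```python
import math


def tail_score(normal_samples, value):
    """Negative log of the estimated probability of seeing `value` or something larger."""
    exceed = sum(1 for sample in normal_samples if sample >= value)
    # Add-one smoothing keeps the estimated probability strictly positive.
    probability = (exceed + 1) / (len(normal_samples) + 1)
    return -math.log(probability)


normal_latencies = list(range(100))  # hypothetical latencies, e.g. in milliseconds
typical = tail_score(normal_latencies, 50)
extreme = tail_score(normal_latencies, 500)
print(f"typical: {typical:.2f}, extreme: {extreme:.2f}")
```

A value that no normal sample reaches gets the largest possible score for the given sample size, which is why such scores are bounded by the amount of normal data.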

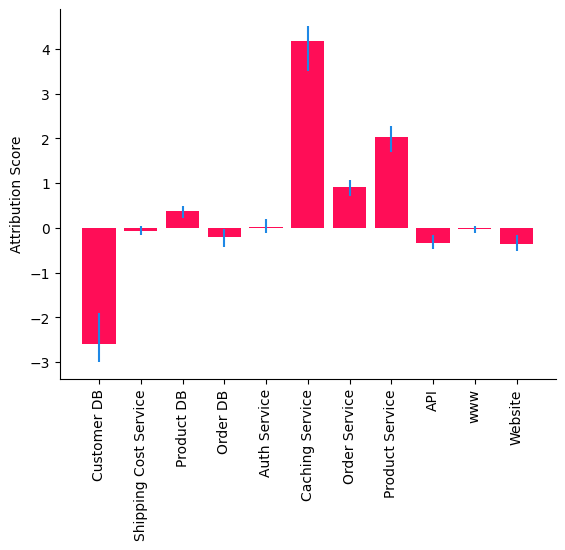

Let us visualize the attributions along with their uncertainty in a bar plot.

[10]:

bar_plot(median_attribs, uncertainty_attribs, 'Attribution Score')

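The attributions show that Caching Service is the main driver of the high latency in Website. This is expected, since we perturbed the causal mechanism of Caching Service to generate an outlier latency in Website (see the Appendix below). Interestingly, Customer DB has a negative contribution, indicating that it was particularly fast and thereby reduced the outlier in Website. Note that some of the attributions are also due to model misspecification. For instance, the parent-child relationship between Product DB and Caching Service seems to indicate two mechanisms. This could be due to an unobserved binary variable (e.g., cache hit/miss) that has a multiplicative effect on Caching Service. An additive noise term cannot capture the multiplicative effect of this unobserved variable.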
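The misspecification can be made visible with a small simulation. The sketch assumes a 50% cache-miss rate and uses stand-in distributions (a plain exponential and a shifted half-normal), so the numbers are illustrative rather than a reproduction of the case study data.

```python
import random

random.seed(0)
n = 5000

# Assumed stand-ins for the parent latency and the unobserved hit/miss variable.
product_db = [random.expovariate(1 / 0.2) for _ in range(n)]
cache_miss = [random.random() < 0.5 for _ in range(n)]
caching = [(p if miss else 0.0) + 0.1 + abs(random.gauss(0, 0.1))
           for p, miss in zip(product_db, cache_miss)]

# Ordinary least squares fit: caching is approximated by slope * product_db + intercept.
mean_p, mean_c = sum(product_db) / n, sum(caching) / n
slope = (sum((p - mean_p) * (c - mean_c) for p, c in zip(product_db, caching))
         / sum((p - mean_p) ** 2 for p in product_db))
intercept = mean_c - slope * mean_p
residuals = [c - (slope * p + intercept) for p, c in zip(product_db, caching)]


def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


# Under a valid additive noise model the residual spread would not depend on the parent.
low = [r for p, r in zip(product_db, residuals) if p < 0.1]
high = [r for p, r in zip(product_db, residuals) if p > 0.4]
print(f"slope={slope:.2f}, residual variance: low={variance(low):.4f}, high={variance(high):.4f}")
```

The residual variance grows with the parent latency, so the noise is not independent of the parent. This is the kind of dependence that the invertibility test in the evaluation flags.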

Scenario 2: Observing permanent degradation of latencies

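In the previous scenario, we attributed a single outlier latency in Website to the services that are nodes in the causal graph, which is useful for in-depth analysis of an individual case. Next, we consider a scenario where we observe a permanent degradation of latencies and want to understand its drivers. In particular, we attribute the change in the average latency of Website to upstream nodes.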

Suppose we get an additional 1000 requests with higher latencies, as follows.

[11]:

outlier_data = pd.read_csv("rca_microservice_architecture_anomaly_1000.csv")

outlier_data.head()

[11]:

| | Product DB | Customer DB | Order DB | Shipping Cost Service | Caching Service | Product Service | Auth Service | Order Service | API | www | Website |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.140874 | 0.270117 | 0.021619 | 0.159421 | 2.201327 | 2.453859 | 0.958687 | 0.572128 | 4.921074 | 5.891927 | 5.937950 |
| 1 | 0.160903 | 0.008235 | 0.182182 | 0.114568 | 2.105901 | 2.259432 | 0.325054 | 0.683030 | 4.009969 | 4.373290 | 4.418746 |
| 2 | 0.013300 | 0.127177 | 0.591904 | 0.112362 | 2.160395 | 2.278189 | 0.645109 | 1.097460 | 4.915487 | 5.578015 | 5.708616 |
| 3 | 1.317167 | 0.145850 | 0.094301 | 0.401206 | 3.505417 | 3.622197 | 0.502680 | 0.880008 | 5.652773 | 6.265665 | 6.356730 |
| 4 | 0.699519 | 0.425039 | 0.233269 | 0.572897 | 2.931482 | 3.062255 | 0.598265 | 0.885846 | 5.585744 | 6.266662 | 6.346390 |

We are interested in the increased latency of Website, averaged over the 1000 requests, that the customers directly experienced.

[12]:

outlier_data['Website'].mean() - normal_data['Website'].mean()

[12]:

Website is slower on average (by almost 2 seconds) than usual. Why?

Attributing permanent degradation of latencies at a target service to other services

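To answer why Website is slower for those 1000 requests than before, we attribute the change in the average latency of Website to the upstream services in the causal graph. We refer the reader to Budhathoki et al., 2021 for the scientific details behind this API. As in the previous scenario, we compute 95% bootstrapped confidence intervals of our attributions and visualize them in a bar plot.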

[13]:

import numpy as np

median_attribs, uncertainty_attribs = gcm.confidence_intervals(
    lambda : gcm.distribution_change(causal_model,
                                     normal_data.sample(frac=0.6),
                                     outlier_data.sample(frac=0.6),
                                     'Website',
                                     difference_estimation_func=lambda x, y: np.mean(y) - np.mean(x)),
    num_bootstrap_resamples = 10)

bar_plot(median_attribs, uncertainty_attribs, 'Attribution Score')

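We observe that Caching Service is the root cause that slowed down Website. In particular, the method we used tells us that a change in the causal mechanism (i.e., the input-output behaviour) of Caching Service (e.g., the caching algorithm) slowed down Website. This is also expected, as the outlier latencies were generated by changing the causal mechanism of Caching Service (see the Appendix below).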
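The intuition of attributing a change in the mean to changed mechanisms can be illustrated with a toy model in which the target simply sums two upstream latencies. The sketch replaces one mechanism at a time and measures the resulting change in the mean. It is a deliberately simplified stand-in, not the method behind `gcm.distribution_change`, and all distributions and offsets are assumptions.

```python
import random

random.seed(0)


def mean_target_latency(n, caching_offset=0.0, order_offset=0.0):
    """Average latency of a toy target that sums two upstream services plus its own noise."""
    total = 0.0
    for _ in range(n):
        caching = caching_offset + 0.1 + abs(random.gauss(0, 0.1))
        order = order_offset + 0.5 + abs(random.gauss(0, 0.2))
        total += caching + order + abs(random.gauss(0, 0.2))
    return total / n


n = 20000
before = mean_target_latency(n)
# Swap in the "new" mechanism of one node at a time, leaving the other one unchanged.
caching_changed = mean_target_latency(n, caching_offset=2.0)
order_changed = mean_target_latency(n, order_offset=0.0)  # this mechanism did not change

contributions = {
    "Caching Service": caching_changed - before,
    "Order Service": order_changed - before,
}
for node, contribution in contributions.items():
    print(f"{node}: {contribution:+.2f} s")
```

Only the node whose mechanism changed receives a sizeable contribution. The other contribution stays near zero up to sampling noise.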

Scenario 3: Simulating the intervention of shifting resources

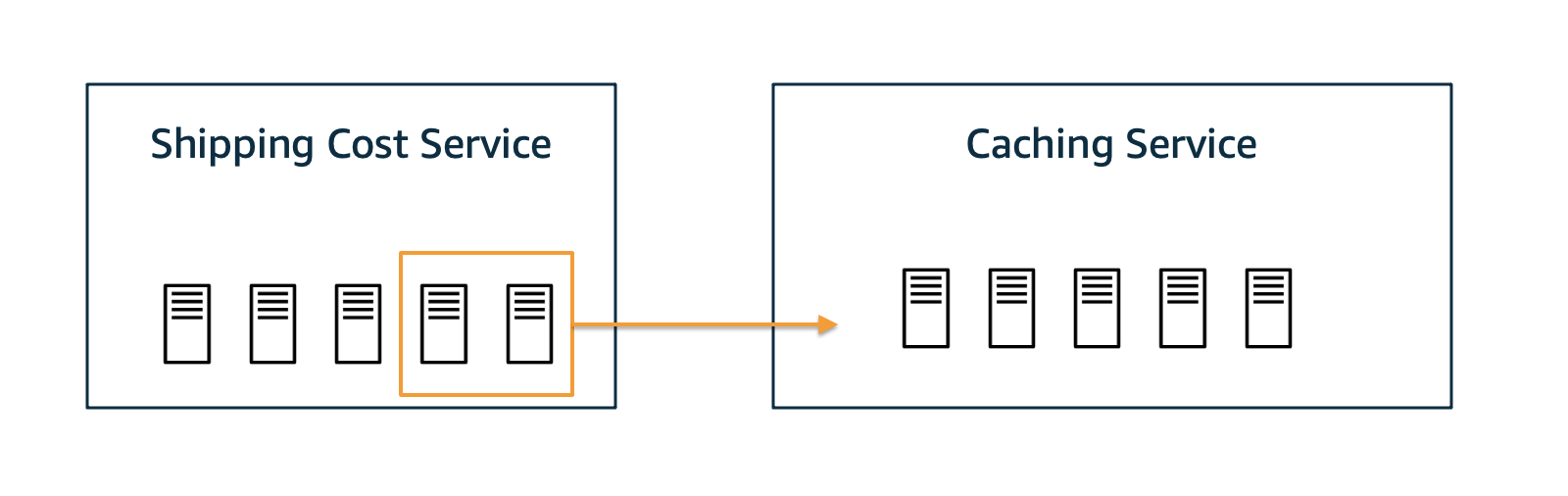

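Next, let us imagine a scenario where a permanent degradation has happened as in Scenario 2 and we have successfully identified Caching Service as the root cause. Furthermore, we figured out that a recent deployment of Caching Service contained a bug that is causing overloaded hosts. A proper fix must be deployed, or the previous deployment must be rolled back. But in the meantime, could we mitigate the situation by shifting some resources from Shipping Cost Service to Caching Service? And would that help? Before doing it in reality, let us simulate it first and see whether it improves the situation.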

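Let us perform an intervention in which we reduce the average latency of Caching Service by 1 second. At the same time, we pay for this speed-up with an average slow-down of 2 seconds in Shipping Cost Service.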

[14]:

median_mean_latencies, uncertainty_mean_latencies = gcm.confidence_intervals(
    lambda : gcm.fit_and_compute(gcm.interventional_samples,
                                 causal_model,
                                 outlier_data,
                                 interventions = {
                                    "Caching Service": lambda x: x-1,
                                    "Shipping Cost Service": lambda x: x+2
                                 },
                                 observed_data=outlier_data)().mean().to_dict(),
    num_bootstrap_resamples=10)

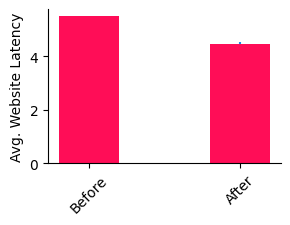

Has the situation improved? Let us visualize the results.

[15]:

avg_website_latency_before = outlier_data.mean().to_dict()['Website']

bar_plot(dict(before=avg_website_latency_before, after=median_mean_latencies['Website']),
         dict(before=np.array([avg_website_latency_before, avg_website_latency_before]), after=uncertainty_mean_latencies['Website']),
         ylabel='Avg. Website Latency',
         figure_size=(3, 2),
         bar_width=0.4,
         xticks=['Before', 'After'],
         xticks_rotation=45)

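Indeed, we get an improvement of roughly 1 second. We are not back to normal operation yet, but we have mitigated part of the problem. From here, we could perhaps wait until a proper fix is deployed.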
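The mechanics of such a shift intervention can be sketched for a single downstream node with a linear mechanism. The coefficients, the request values and the decision to hold the noise term fixed are all assumptions of this sketch. In the case study, the fitted causal model determines how the shifts propagate.

```python
# Hypothetical linear mechanism for one downstream node; the coefficients are made up.
COEFFICIENTS = {"Caching Service": 1.0, "Shipping Cost Service": 0.1}


def product_service_latency(parents, noise):
    """Linear additive noise mechanism: weighted parent latencies plus a noise term."""
    return sum(COEFFICIENTS[name] * parents[name] for name in COEFFICIENTS) + noise


# One hypothetical anomalous request (seconds).
parents_before = {"Caching Service": 2.2, "Shipping Cost Service": 0.2}
latency_before = 2.5
# Noise implied by the observation; holding it fixed is a simplification of this sketch.
noise = latency_before - product_service_latency(parents_before, 0.0)

# The same shift interventions as in the text: functions applied to a node's value.
interventions = {
    "Caching Service": lambda x: x - 1,
    "Shipping Cost Service": lambda x: x + 2,
}
parents_after = {name: interventions[name](value) for name, value in parents_before.items()}
latency_after = product_service_latency(parents_after, noise)

print(f"before: {latency_before:.2f} s, after: {latency_after:.2f} s")
```

The net effect depends on how strongly each parent influences the downstream node. A slow-down in a weakly weighted parent can be outweighed by a speed-up in a strongly weighted one.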

Appendix: Data generation process

The scenarios above work on synthetic data. The normal data was generated using the following functions:

[16]:

from scipy.stats import truncexpon, halfnorm

def create_observed_latency_data(unobserved_intrinsic_latencies):
    observed_latencies = {}
    observed_latencies['Product DB'] = unobserved_intrinsic_latencies['Product DB']
    observed_latencies['Customer DB'] = unobserved_intrinsic_latencies['Customer DB']
    observed_latencies['Order DB'] = unobserved_intrinsic_latencies['Order DB']
    observed_latencies['Shipping Cost Service'] = unobserved_intrinsic_latencies['Shipping Cost Service']
    observed_latencies['Caching Service'] = np.random.choice([0, 1], size=(len(observed_latencies['Product DB']),),
                                                             p=[.5, .5]) * \
                                            observed_latencies['Product DB'] \
                                            + unobserved_intrinsic_latencies['Caching Service']
    observed_latencies['Product Service'] = np.maximum(np.maximum(observed_latencies['Shipping Cost Service'],
                                                                  observed_latencies['Caching Service']),
                                                       observed_latencies['Customer DB']) \
                                            + unobserved_intrinsic_latencies['Product Service']
    observed_latencies['Auth Service'] = observed_latencies['Customer DB'] \
                                         + unobserved_intrinsic_latencies['Auth Service']
    observed_latencies['Order Service'] = observed_latencies['Order DB'] \
                                          + unobserved_intrinsic_latencies['Order Service']
    observed_latencies['API'] = observed_latencies['Product Service'] \
                                + observed_latencies['Customer DB'] \
                                + observed_latencies['Auth Service'] \
                                + observed_latencies['Order Service'] \
                                + unobserved_intrinsic_latencies['API']
    observed_latencies['www'] = observed_latencies['API'] \
                                + observed_latencies['Auth Service'] \
                                + unobserved_intrinsic_latencies['www']
    observed_latencies['Website'] = observed_latencies['www'] \
                                    + unobserved_intrinsic_latencies['Website']

    return pd.DataFrame(observed_latencies)

def unobserved_intrinsic_latencies_normal(num_samples):
    return {
        'Website': truncexpon.rvs(size=num_samples, b=3, scale=0.2),
        'www': truncexpon.rvs(size=num_samples, b=2, scale=0.2),
        'API': halfnorm.rvs(size=num_samples, loc=0.5, scale=0.2),
        'Auth Service': halfnorm.rvs(size=num_samples, loc=0.1, scale=0.2),
        'Product Service': halfnorm.rvs(size=num_samples, loc=0.1, scale=0.2),
        'Order Service': halfnorm.rvs(size=num_samples, loc=0.5, scale=0.2),
        'Shipping Cost Service': halfnorm.rvs(size=num_samples, loc=0.1, scale=0.2),
        'Caching Service': halfnorm.rvs(size=num_samples, loc=0.1, scale=0.1),
        'Order DB': truncexpon.rvs(size=num_samples, b=5, scale=0.2),
        'Customer DB': truncexpon.rvs(size=num_samples, b=6, scale=0.2),
        'Product DB': truncexpon.rvs(size=num_samples, b=10, scale=0.2)
    }

normal_data = create_observed_latency_data(unobserved_intrinsic_latencies_normal(10000))

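This simulates the latency relationships under the assumptions that the services are synchronous and that no hidden factor affects two nodes at the same time. Furthermore, we assume that Caching Service has to call through to Product DB in only 50% of the cases (i.e., we have a 50% cache miss rate). Also, we assume that Product Service can call its downstream services Shipping Cost Service, Caching Service and Customer DB in parallel and joins the threads when all three services have returned.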

We use truncated exponential and half-normal distributions, since their shapes are similar to distributions observed in real services.

The anomalous data is generated in the following way:

[17]:

def unobserved_intrinsic_latencies_anomalous(num_samples):
    return {
        'Website': truncexpon.rvs(size=num_samples, b=3, scale=0.2),
        'www': truncexpon.rvs(size=num_samples, b=2, scale=0.2),
        'API': halfnorm.rvs(size=num_samples, loc=0.5, scale=0.2),
        'Auth Service': halfnorm.rvs(size=num_samples, loc=0.1, scale=0.2),
        'Product Service': halfnorm.rvs(size=num_samples, loc=0.1, scale=0.2),
        'Order Service': halfnorm.rvs(size=num_samples, loc=0.5, scale=0.2),
        'Shipping Cost Service': halfnorm.rvs(size=num_samples, loc=0.1, scale=0.2),
        'Caching Service': 2 + halfnorm.rvs(size=num_samples, loc=0.1, scale=0.1),
        'Order DB': truncexpon.rvs(size=num_samples, b=5, scale=0.2),
        'Customer DB': truncexpon.rvs(size=num_samples, b=6, scale=0.2),
        'Product DB': truncexpon.rvs(size=num_samples, b=10, scale=0.2)
    }

outlier_data = create_observed_latency_data(unobserved_intrinsic_latencies_anomalous(1000))

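Here, we significantly increased the average latency of Caching Service by two seconds, which coincides with our results from the RCA. Note that a high latency in Caching Service leads to constantly higher latencies in upstream services. In particular, customers experience a higher latency than usual.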